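I recently had some free time and ran a few experiments on deploying Redis across multiple nodes; these are my notes.

Redis offers three main multi-node deployment modes, each suited to different scenarios. Below is a comparison, followed by a hands-on deployment walkthrough based on Docker: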

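I. Comparison of Redis deployment modes

| Mode | Replication (master-replica) | Sentinel | Cluster (sharded) |
| --- | --- | --- | --- |
| Core function | Data redundancy, read/write splitting | Replication + automatic failover | Data sharding + high availability + horizontal scaling (resharding is triggered manually) |
| Data consistency | Asynchronous replication (acknowledged writes can be lost) | Asynchronous replication | Asynchronous replication, partitioned by hash slot |
| Typical use | Read-heavy workloads, disaster-recovery backup | High availability (automatic master switchover) | Large datasets, high concurrency, horizontal scaling |
| Node count | At least 1 master + 1 replica | At least 1 master + 1 replica + 3 Sentinels | At least 3 masters + 3 replicas (6 nodes) |
| Client requirements | Client must be repointed to the new master manually | Client must support the Sentinel protocol | Client must support the Cluster protocol |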

II. Deploying the three modes with Docker

Each environment below is built from a docker-compose.yml file (Docker and docker-compose must be installed beforehand).

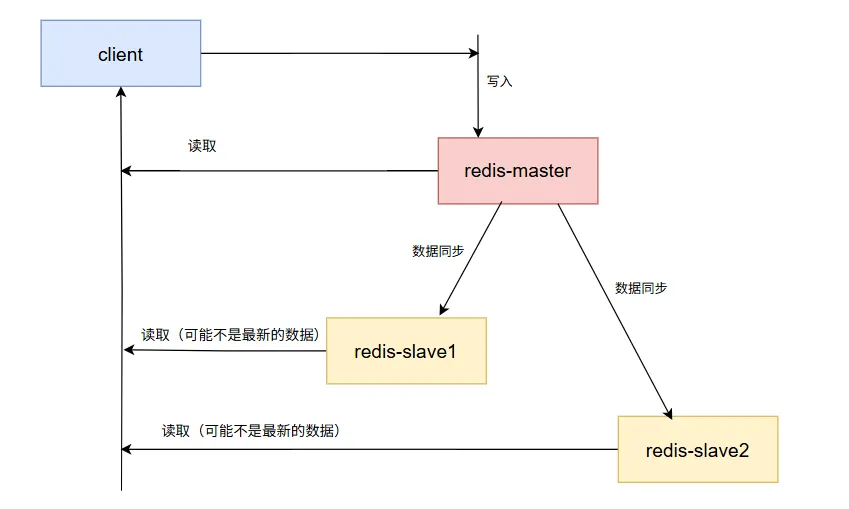

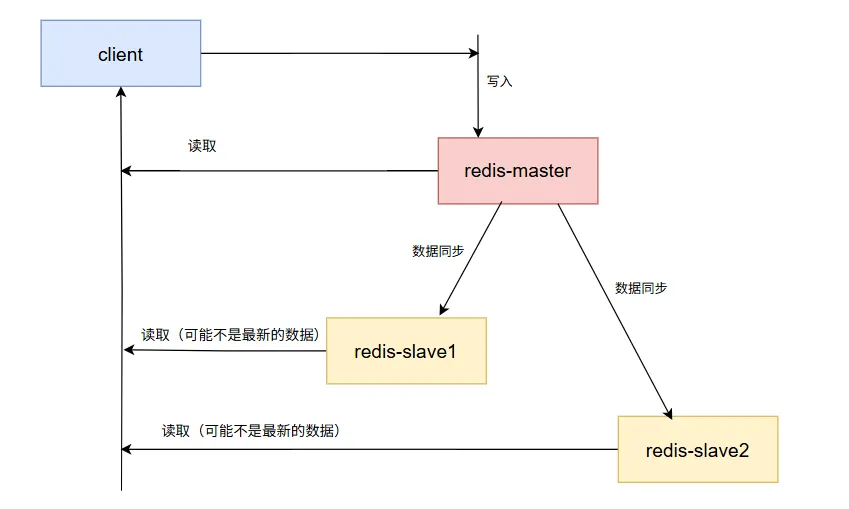

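1. Replication mode (master-replica)

- Nodes for production: 1 master + 2 replicas (better read throughput and fault tolerance)

- Use cases: read-heavy workloads, cold backup of data

Directory layout

Create a test directory master-slave-mode with the following tree: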

```text
master-slave-mode/
├── docker-compose.yml
└── data/
    ├── master/
    ├── slave1/
    └── slave2/
```

Create the data directories:

```bash
mkdir -p data/{master,slave1,slave2}
# Wide-open permissions, acceptable for a local test only; tighten them anywhere else
chmod -R 777 data/*
```

docker-compose configuration

Write the following to docker-compose.yml:

```yaml
services:
  redis-master:
    image: redis:7.0
    command: redis-server --requirepass 123456
    ports: ["6379:6379"]
    volumes: ["./data/master:/data"]

  redis-slave1:
    image: redis:7.0
    command: redis-server --replicaof redis-master 6379 --requirepass 123456 --masterauth 123456
    depends_on: [redis-master]
    volumes: ["./data/slave1:/data"]

  redis-slave2:
    image: redis:7.0
    command: redis-server --replicaof redis-master 6379 --requirepass 123456 --masterauth 123456
    depends_on: [redis-master]
    volumes: ["./data/slave2:/data"]
```

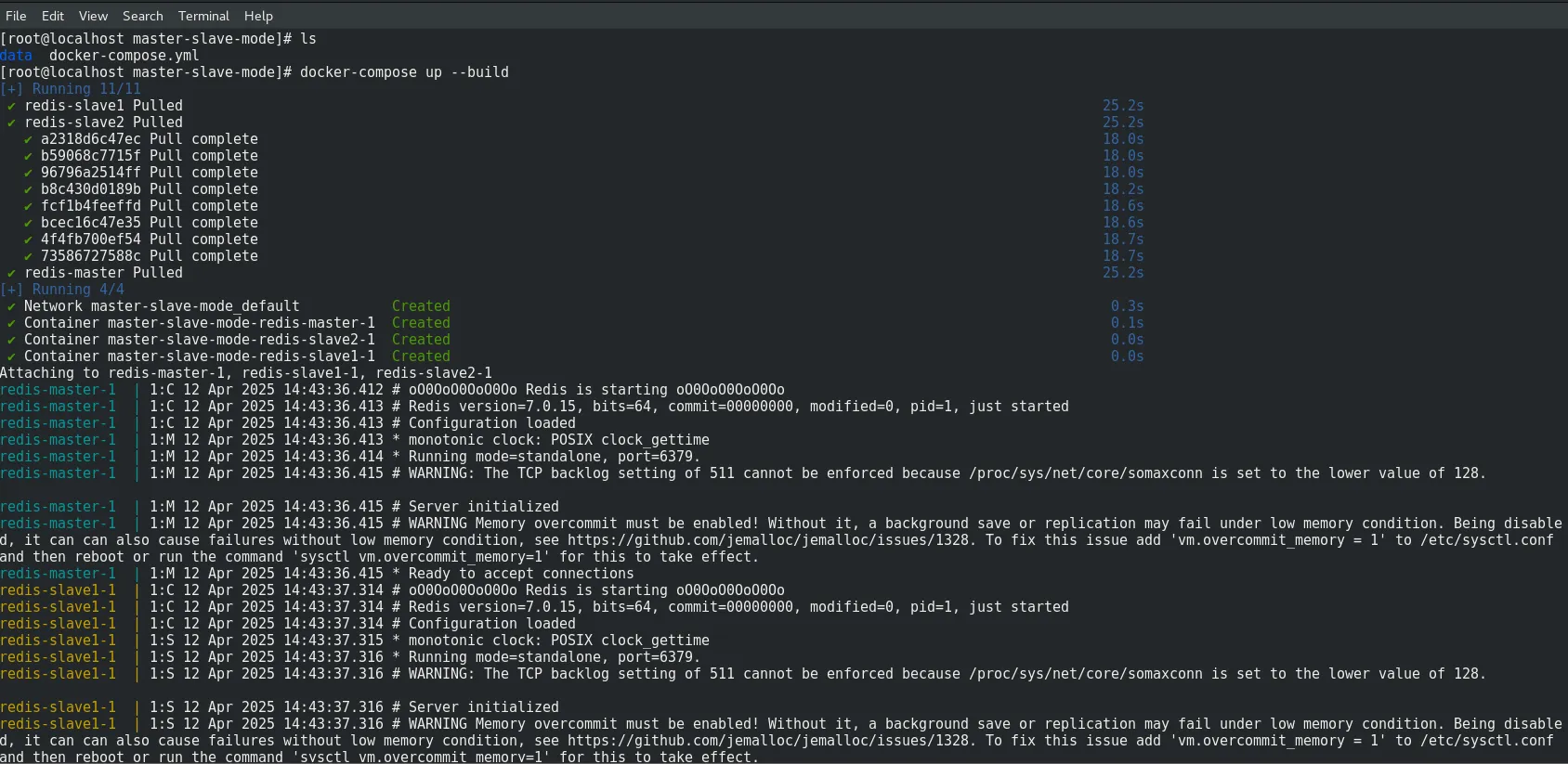

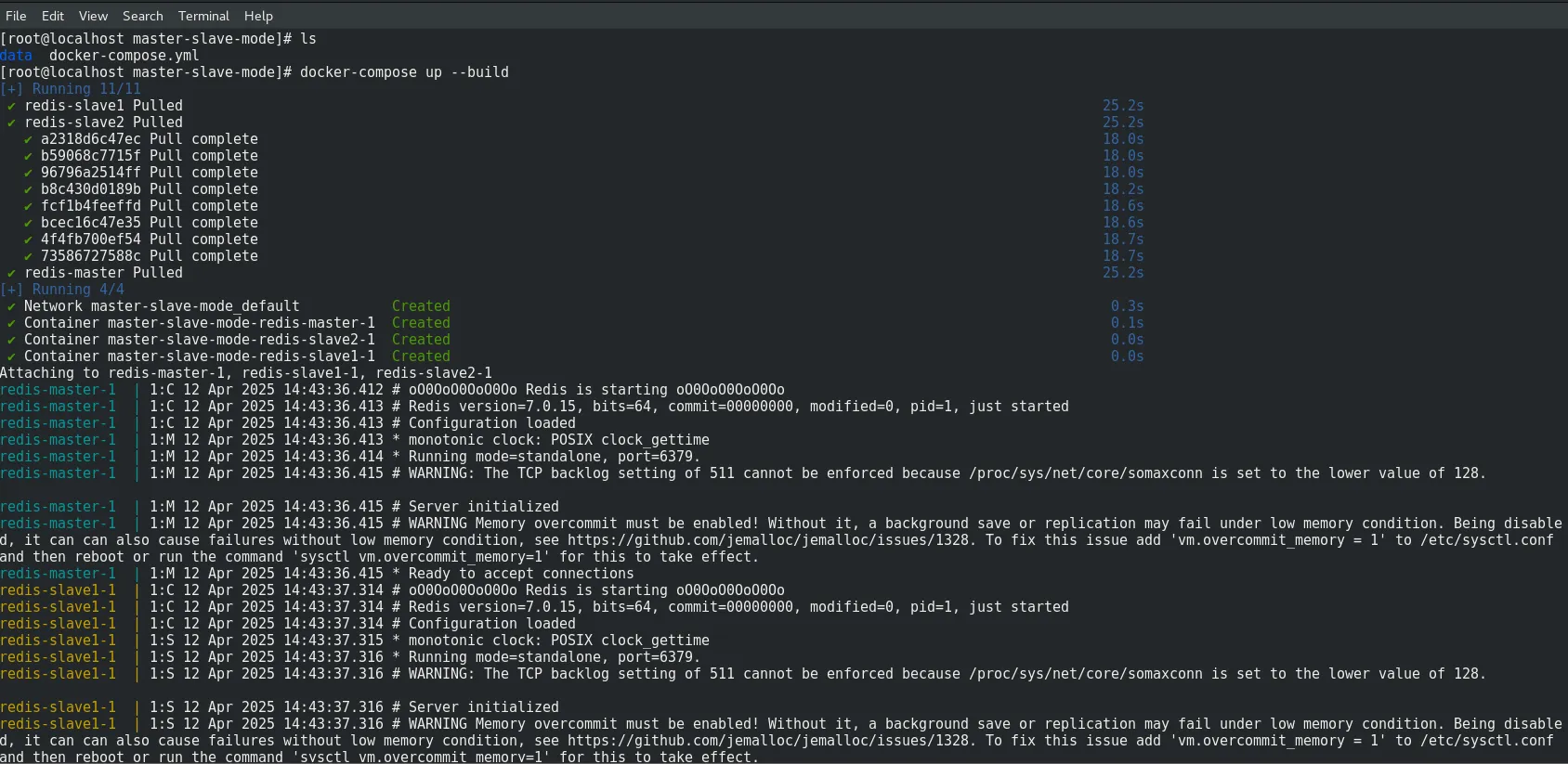

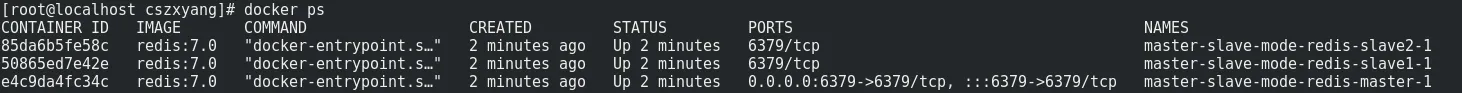

Start the cluster

```bash
docker-compose up --build
```

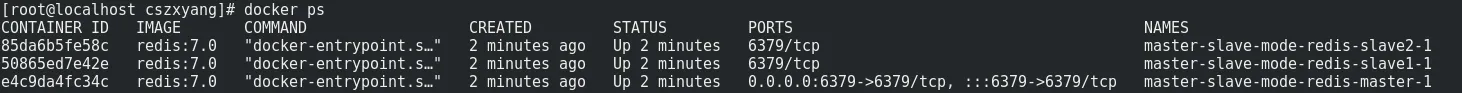

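The one-master, two-replica setup is now running with three nodes.

Feature checks

- Read/write splitting: write on the master, read from a replica

- Replication delay: write on the master, then watch how long the data takes to become visible on a replica

- Recovery from a master outage: manually promote a replica to master

Test 1 - Replication delay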

```bash
# Write to the master
docker exec -it master-slave-mode-redis-master-1 redis-cli -a 123456 set key1 "master"
# Read from a replica (writes are rejected there)
# Returns "master"
docker exec -it master-slave-mode-redis-slave1-1 redis-cli -a 123456 get key1
# Fails with a READONLY error
docker exec -it master-slave-mode-redis-slave1-1 redis-cli -a 123456 set key2 "slave"
# Sample latency against the second replica (press Ctrl-C to stop).
# Note: --latency times PING round trips; it does not measure replication lag itself.
docker exec -it master-slave-mode-redis-slave2-1 redis-cli -a 123456 --latency -i 1
```

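With asynchronous replication, data usually becomes visible on a replica within roughly 1 to 100 ms; it can take longer when the network is unstable.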

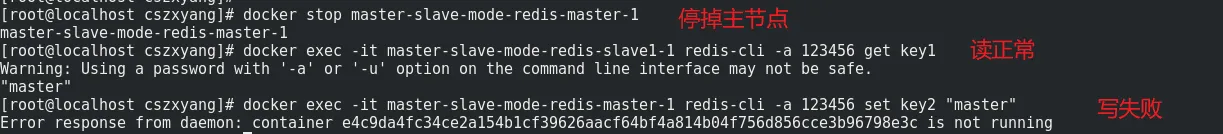

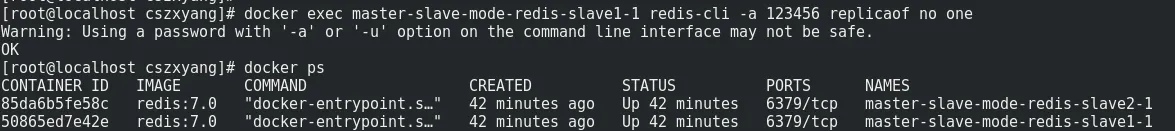

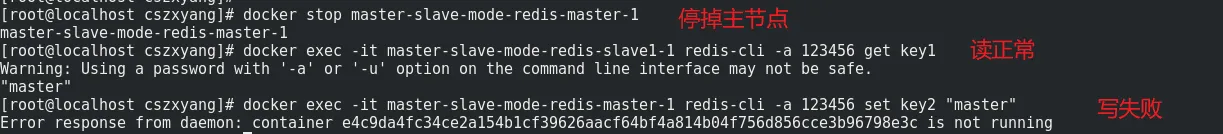

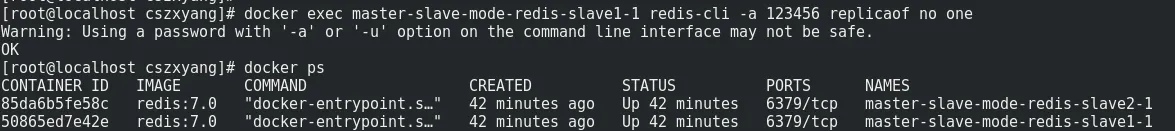

Test 2 - Recovery from a master outage

```bash
# Stop the master
docker stop master-slave-mode-redis-master-1
# Manually promote slave1 to master
docker exec master-slave-mode-redis-slave1-1 redis-cli -a 123456 replicaof no one
# Confirm the new role
docker exec -it master-slave-mode-redis-slave1-1 redis-cli -a 123456 INFO replication
```

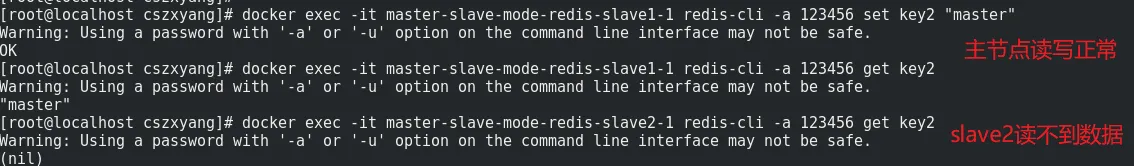

After the master is stopped, the replicas still serve reads, but nothing can be written any more.

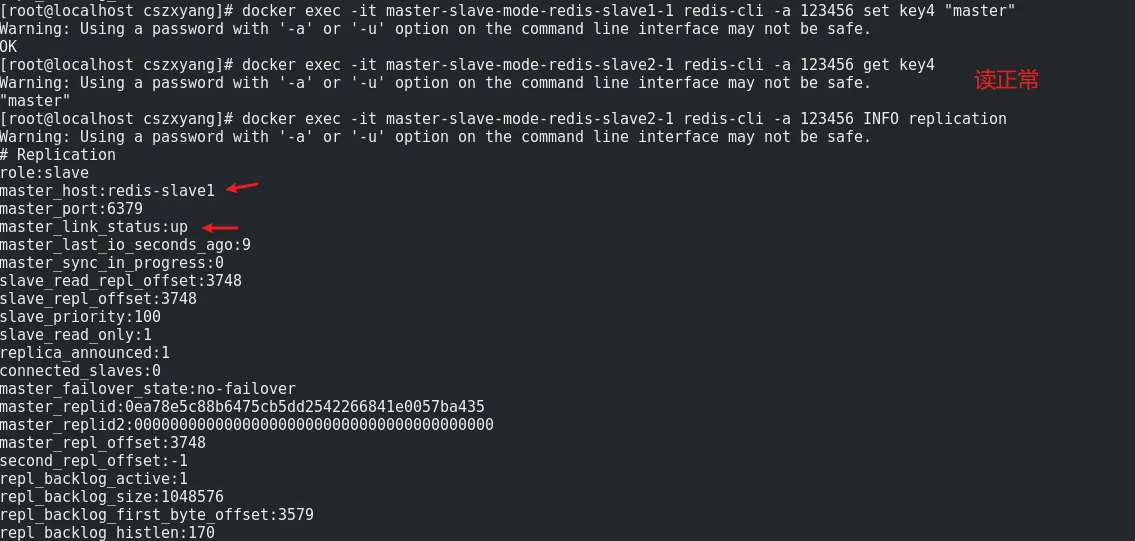

Promote slave1 to master.

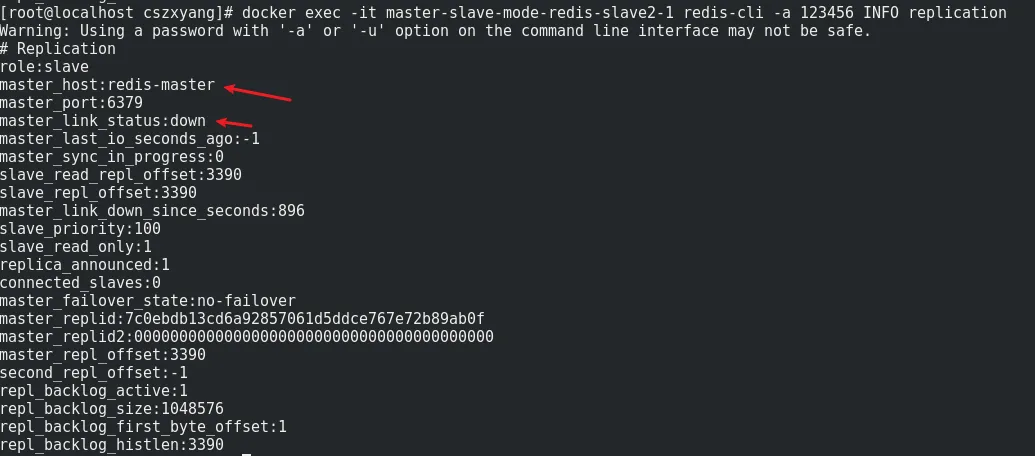

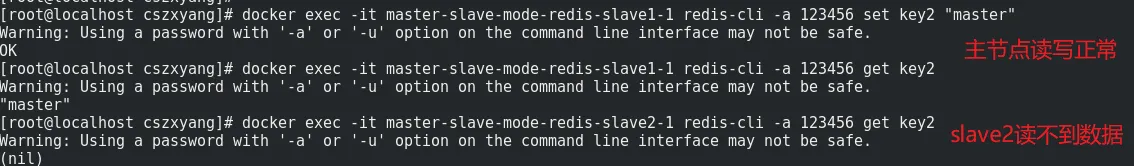

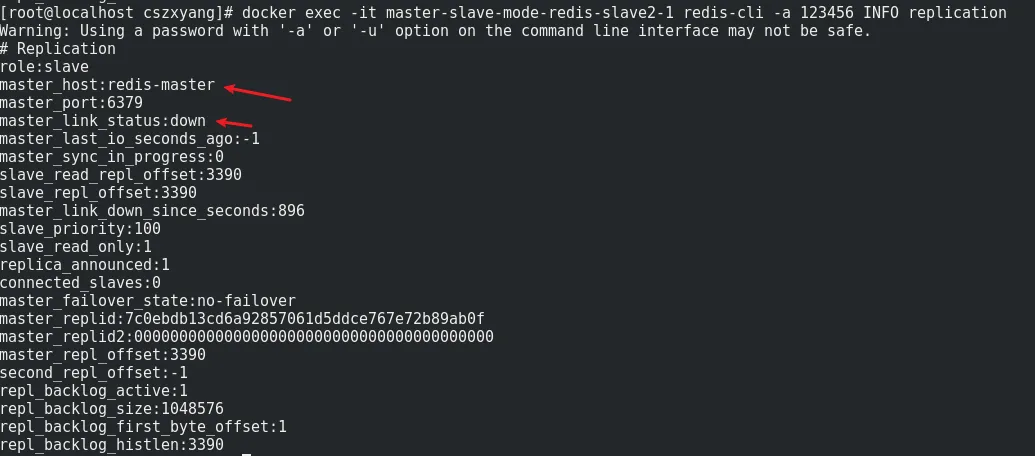

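slave1 now handles both reads and writes, but slave2 does not see the data written to slave1, because slave2 still points at the old master and has to be repointed as well.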

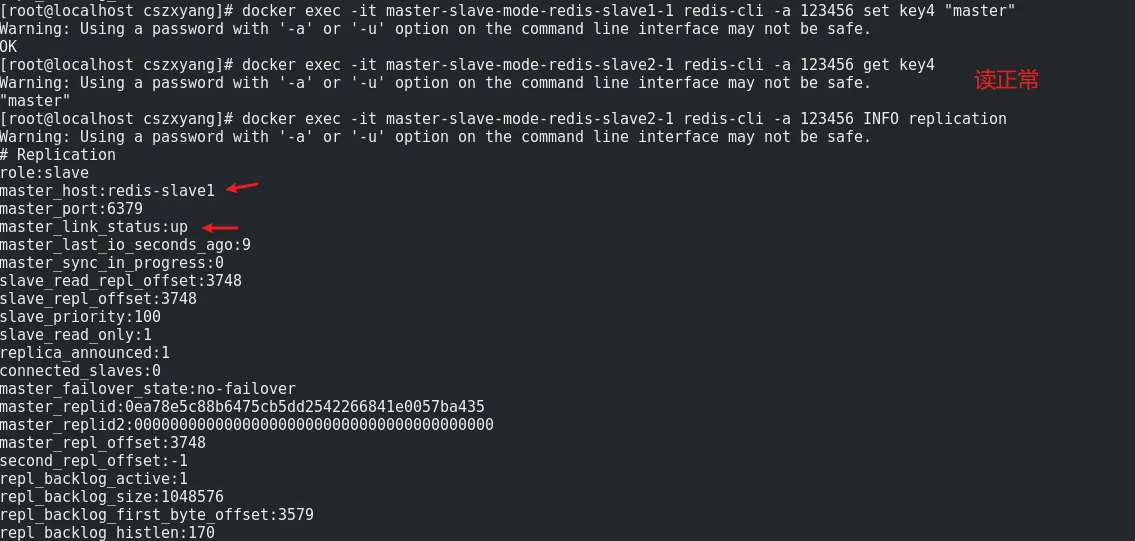

Point slave2 at slave1 as its new master (the command must run on slave2):

```bash
docker exec -it master-slave-mode-redis-slave2-1 redis-cli -a 123456 REPLICAOF redis-slave1 6379
```

Result: failover requires manual intervention, so the length of the outage depends on how quickly the operators respond.

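2. Sentinel mode

In plain replication mode someone has to step in when the master fails, which is inconvenient. Sentinel addresses this: once the master is judged to be down, the Sentinels automatically elect a new master from the replicas.

- Nodes for production: 3 Sentinels + 1 master + 2 replicas (enough Sentinels to reach a voting majority)

- Use cases: high availability, automatic failover

- **Redis version:** Redis 7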

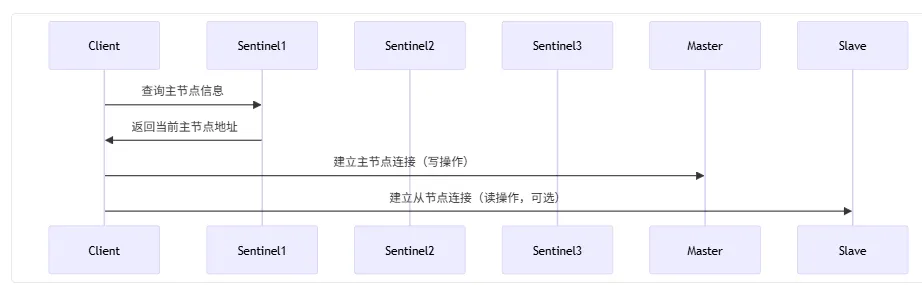

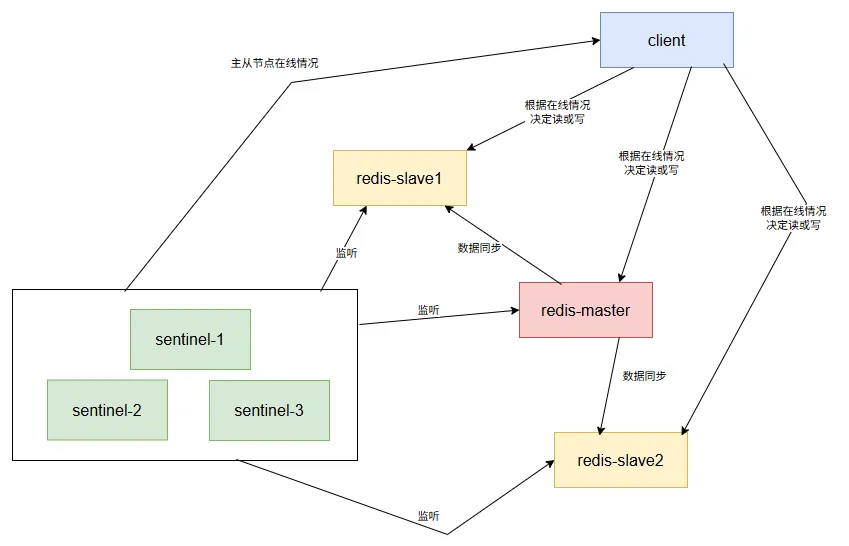

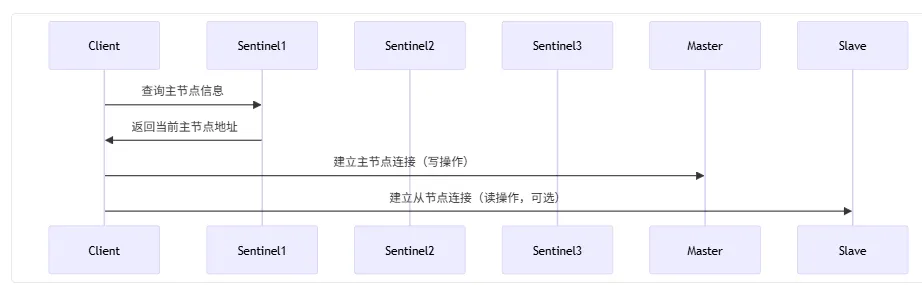

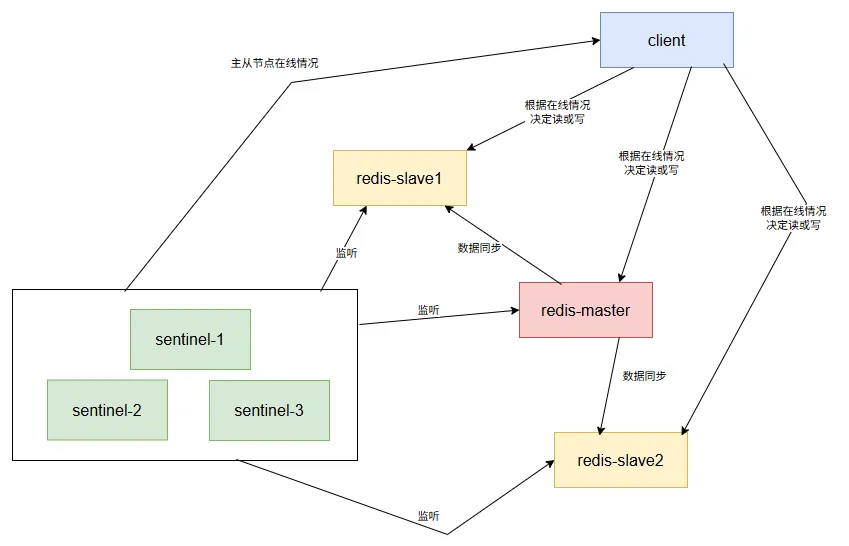

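The main connection flow between a client, the Sentinel group, and the master/replica nodes is as follows:

The Sentinels run independently of the data nodes. They monitor whether the master and replicas are reachable and tell clients which node is currently available; clients then connect to the appropriate node for reads and writes.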
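The discovery step can be illustrated at the wire level. The sketch below only builds the RESP request for `SENTINEL get-master-addr-by-name` and parses a reply of the usual two-element form; it does not open a socket. The function names `encode_command` and `parse_master_addr` are invented for this example, and the sample reply bytes are illustrative. Real Sentinel-aware clients do considerably more (trying several Sentinels, reacting to master-switch notifications).

```python
def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def parse_master_addr(reply: bytes) -> tuple[str, int]:
    """Parse a two-element bulk-string array reply into (host, port)."""
    parts = reply.split(b"\r\n")
    # Expected layout: [b"*2", b"$<len>", host, b"$<len>", port, b""]
    if parts[0] != b"*2":
        raise ValueError("unexpected reply: %r" % reply)
    return parts[2].decode(), int(parts[4])


if __name__ == "__main__":
    # "mymaster" is the master name used in the Sentinel config of this post.
    request = encode_command("SENTINEL", "get-master-addr-by-name", "mymaster")
    print(request)
    # Illustrative reply bytes, not captured from a live Sentinel.
    sample_reply = b"*2\r\n$10\r\n172.27.0.6\r\n$4\r\n6379\r\n"
    print(parse_master_addr(sample_reply))
```

In practice a client library handles this; the point is only that the client asks a Sentinel first and connects to whatever address comes back.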

Directory layout

Create a test directory sentinel-mode with the following tree:

```text
sentinel-mode/
├── docker-compose.yml
├── redis/
│   ├── master/
│   │   ├── data/          # persistent data directory
│   │   └── redis.conf
│   ├── slave1/
│   │   ├── data/
│   │   └── redis.conf
│   └── slave2/
│       ├── data/
│       └── redis.conf
└── sentinel/
    ├── sentinel1.conf
    ├── sentinel2.conf
    └── sentinel3.conf
```

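The replication setup above did not mount any configuration file from the host. Here both the data and the configuration live outside the containers, so neither is lost when a container is removed:

Create the data directories:

```bash
mkdir -p redis/{master,slave1,slave2}/data
# Wide-open permissions, acceptable for a local test only; tighten them anywhere else
chmod -R 777 redis/*/data
```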

redis.conf configuration

```conf
# Master: redis/master/redis.conf
port 6379
bind 0.0.0.0
dir /data
requirepass 123456
masterauth 123456
appendonly yes
appendfsync everysec

# Replica: redis/slave1/redis.conf
port 6379
bind 0.0.0.0
dir /data
requirepass 123456
masterauth 123456
replicaof redis-master 6379
replica-serve-stale-data yes
appendonly yes

# Replica: redis/slave2/redis.conf
port 6379
bind 0.0.0.0
dir /data
requirepass 123456
masterauth 123456
replicaof redis-master 6379
replica-serve-stale-data yes
appendonly yes
```

Sentinel configuration

Edit the sentinel/sentinel*.conf files:

```conf
port 26379
sentinel monitor mymaster redis-master 6379 2
sentinel auth-pass mymaster 123456
sentinel down-after-milliseconds mymaster 5000
sentinel failover-timeout mymaster 10000
sentinel parallel-syncs mymaster 1
sentinel deny-scripts-reconfig yes
sentinel resolve-hostnames yes
sentinel announce-hostnames yes
```

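Configuration notes:

sentinel monitor mymaster redis-master 6379 2

- Monitors a master named mymaster

- The master's address is redis-master:6379 (the Docker service name)

- 2 is the quorum: at least 2 Sentinels must agree that the master is down before a failover is triggered

sentinel auth-pass mymaster 123456

- The password used to authenticate against password-protected Redis instances

sentinel down-after-milliseconds mymaster 5000

- A Sentinel considers the master down once it has been unreachable for 5000 ms

sentinel failover-timeout mymaster 10000

- Timeout for a failover attempt, in milliseconds

sentinel parallel-syncs mymaster 1

- How many replicas may resynchronize with the new master at the same time after a failover

sentinel resolve-hostnames and sentinel announce-hostnames:

- Enable hostname support, so the Sentinels can find the master through its container name redis-master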
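The quorum value and the number of Sentinels interact: the quorum decides when the master is agreed to be down, but carrying out the failover additionally needs votes from a majority of all Sentinels. A small sketch of that arithmetic follows; the function names are made up for this illustration, and it models only the counting rule, not the real election.

```python
def majority(total_sentinels: int) -> int:
    """Smallest number of Sentinels that forms a strict majority."""
    return total_sentinels // 2 + 1


def can_mark_down(agreeing: int, quorum: int) -> bool:
    """The master is agreed to be down once `quorum` Sentinels report it."""
    return agreeing >= quorum


def can_failover(reachable: int, total_sentinels: int, quorum: int) -> bool:
    """A failover needs both the quorum and a majority of all Sentinels."""
    return reachable >= max(quorum, majority(total_sentinels))


if __name__ == "__main__":
    # The setup in this post: 3 Sentinels, quorum 2.
    for alive in (3, 2, 1):
        print(alive, "Sentinels reachable ->", can_failover(alive, 3, 2))
```

With 3 Sentinels and a quorum of 2, losing one Sentinel still allows a failover, while losing two does not; this is why three is the usual minimum.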

docker-compose configuration

Write the following to docker-compose.yml:

```yaml
services:
  redis-master:
    image: redis:7
    container_name: redis-master
    command: redis-server /usr/local/etc/redis/redis.conf
    volumes:
      - ./redis/master/redis.conf:/usr/local/etc/redis/redis.conf
      - ./redis/master/data:/data
    networks:
      - redis-net
    ports:
      - "6379:6379"

  redis-slave1:
    image: redis:7
    container_name: redis-slave1
    command: redis-server /usr/local/etc/redis/redis.conf
    volumes:
      - ./redis/slave1/redis.conf:/usr/local/etc/redis/redis.conf
      - ./redis/slave1/data:/data
    networks:
      - redis-net
    depends_on:
      - redis-master

  redis-slave2:
    image: redis:7
    container_name: redis-slave2
    command: redis-server /usr/local/etc/redis/redis.conf
    volumes:
      - ./redis/slave2/redis.conf:/usr/local/etc/redis/redis.conf
      - ./redis/slave2/data:/data
    networks:
      - redis-net
    depends_on:
      - redis-master

  sentinel1:
    image: redis:7
    container_name: sentinel1
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel/sentinel1.conf:/usr/local/etc/redis/sentinel.conf
    networks:
      - redis-net
    ports:
      - "26379:26379"
    depends_on:
      - redis-master

  sentinel2:
    image: redis:7
    container_name: sentinel2
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel/sentinel2.conf:/usr/local/etc/redis/sentinel.conf
    networks:
      - redis-net
    depends_on:
      - redis-master

  sentinel3:
    image: redis:7
    container_name: sentinel3
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel/sentinel3.conf:/usr/local/etc/redis/sentinel.conf
    networks:
      - redis-net
    depends_on:
      - redis-master

networks:
  redis-net:
    driver: bridge
```

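- A user-defined network named redis-net is created; all nodes join it and can reach each other

- For the master and replicas, both configuration and data are mounted from the host

- depends_on makes every other container start after the master container (it controls start order only, not readiness)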

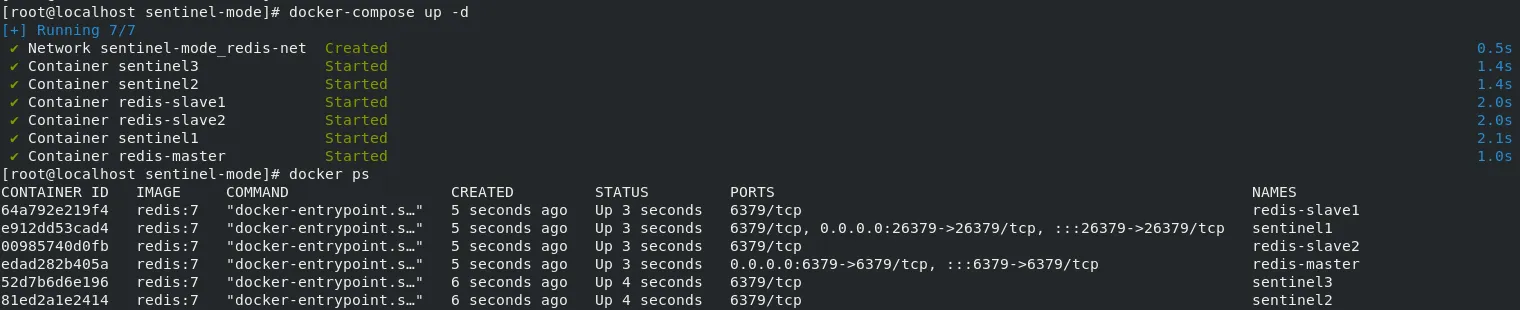

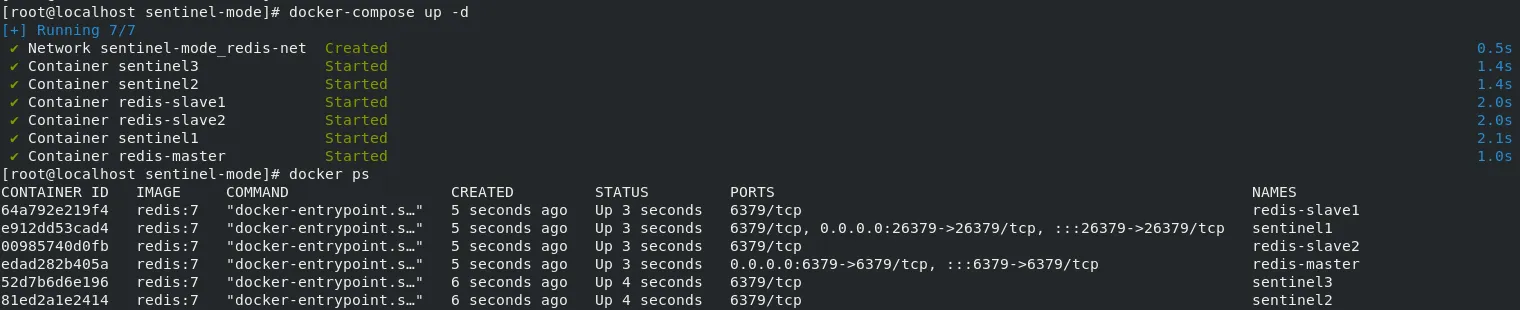

Start the cluster

Stop the cluster and delete its data

```bash
# Stop and remove the old containers
docker-compose down
# Remove any leftover data
rm -rf redis/*/data/*
```

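Feature checks

- Automatic failover: kill the master and time how long the Sentinels take to elect a new one

- Client redirection: test automatic switchover with a Sentinel-aware client (such as Jedis)

- Recovery from a network partition: simulate a network outage, then verify data consistency

First, inspect the network: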

```text
$ docker network inspect sentinel-mode_redis-net
[
    {
        "Name": "sentinel-mode_redis-net",
        "Containers": {
            "5cb420ef5a4dc4afd5c4c0d5928693c8f80810ab7278b6bc5dfb7ed68abaa7ee": {
                "Name": "redis-slave1",
                "MacAddress": "02:42:ac:1b:00:06",
                "IPv4Address": "172.27.0.6/16",
                "IPv6Address": ""
            },
            "890735847cf0be3a78b14792287b45d0aba83e574019c0c13943c7d8558b741f": {
                "Name": "sentinel1",
                "MacAddress": "02:42:ac:1b:00:05",
                "IPv4Address": "172.27.0.5/16",
                "IPv6Address": ""
            },
            "8c2a12501beccce209735aff4b88c2663e02f70b6acb91ef9a02697def1d0322": {
                "Name": "sentinel3",
                "MacAddress": "02:42:ac:1b:00:03",
                "IPv4Address": "172.27.0.3/16",
                "IPv6Address": ""
            },
            "a97c2d77ffeda7a1286ff8925c35f4199a40d1e15f3e2794e5651c8d05dc6086": {
                "Name": "sentinel2",
                "MacAddress": "02:42:ac:1b:00:04",
                "IPv4Address": "172.27.0.4/16",
                "IPv6Address": ""
            },
            "dacb73d889f3ccc45029eb0e5b5e9e3dbed17a492424bf8850cef165ca575bdc": {
                "Name": "redis-master",
                "MacAddress": "02:42:ac:1b:00:02",
                "IPv4Address": "172.27.0.2/16",
                "IPv6Address": ""
            },
            "e5450d90898f08dda7b6d0316b7ff76b1e36fd35957bc8185969573b07bf6a1b": {
                "Name": "redis-slave2",
                "MacAddress": "02:42:ac:1b:00:07",
                "IPv4Address": "172.27.0.7/16",
                "IPv6Address": ""
            }
        },
    }
]
```

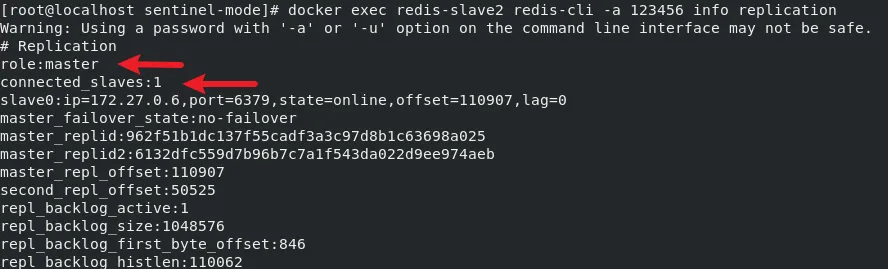

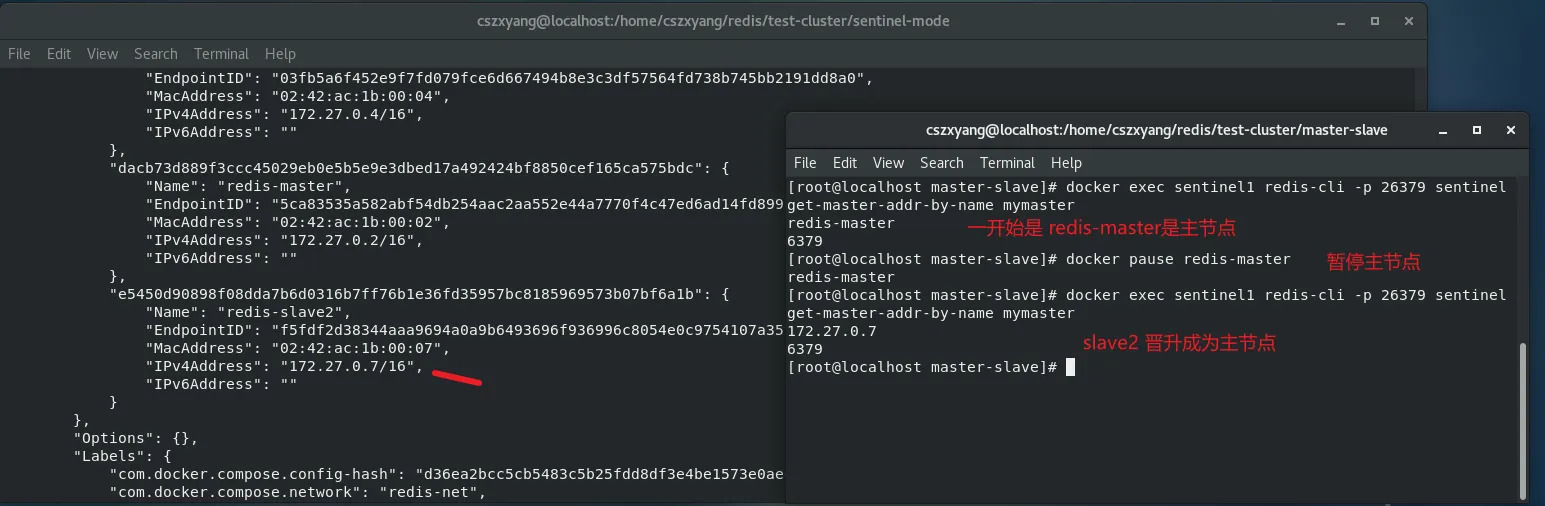

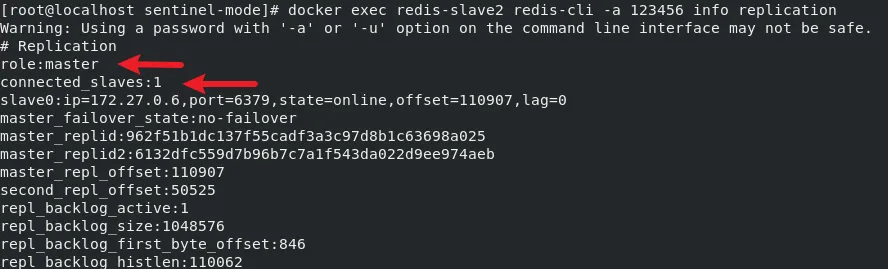

Check 1: Inspect the replication topology

Master node info:

```text
$ docker exec redis-master redis-cli -a 123456 info replication
# Replication
role:master
connected_slaves:2
slave0:ip=172.27.0.6,port=6379,state=online,offset=32739,lag=0
slave1:ip=172.27.0.7,port=6379,state=online,offset=32739,lag=0
master_failover_state:no-failover
master_replid:6132dfc559d7b96b7c7a1f543da022d9ee974aeb
master_replid2:0000000000000000000000000000000000000000
master_repl_offset:32739
second_repl_offset:-1
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:1
repl_backlog_histlen:32739
```

Replica node info:

```text
$ docker exec redis-slave1 redis-cli -a 123456 info replication
# Replication
role:slave
master_host:redis-master
master_port:6379
master_link_status:up
master_last_io_seconds_ago:1
master_sync_in_progress:0
slave_read_repl_offset:37699
slave_repl_offset:37699
slave_priority:100
slave_read_only:1
replica_announced:1
connected_slaves:0
master_failover_state:no-failover
master_replid:6132dfc559d7b96b7c7a1f543da022d9ee974aeb
master_replid2:0000000000000000000000000000000000000000
master_repl_offset:37699
second_repl_offset:-1
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:846
repl_backlog_histlen:36854
```
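The offsets in these outputs give a rough view of replication lag: the master's master_repl_offset minus the offset reported on each slaveN line is the number of bytes of the replication stream that replica has not yet acknowledged. A minimal sketch, assuming the master's `INFO replication` output has been captured as text (the function name `replication_lag_bytes` is made up for this example):

```python
import re


def replication_lag_bytes(master_info: str) -> dict[str, int]:
    """Map each replica IP to (master_repl_offset - replica offset)."""
    found = re.search(r"^master_repl_offset:(\d+)", master_info, re.MULTILINE)
    if found is None:
        raise ValueError("master_repl_offset not found")
    master_offset = int(found.group(1))

    lags = {}
    for line in master_info.splitlines():
        match = re.match(r"slave\d+:(.*)", line.strip())
        if not match:
            continue
        # A slaveN line looks like: ip=...,port=...,state=...,offset=...,lag=...
        fields = dict(pair.split("=", 1) for pair in match.group(1).split(","))
        lags[fields["ip"]] = master_offset - int(fields["offset"])
    return lags


if __name__ == "__main__":
    # Lines taken from the master output shown above.
    sample = (
        "role:master\n"
        "slave0:ip=172.27.0.6,port=6379,state=online,offset=32739,lag=0\n"
        "slave1:ip=172.27.0.7,port=6379,state=online,offset=32739,lag=0\n"
        "master_repl_offset:32739\n"
    )
    print(replication_lag_bytes(sample))
```

A lag of 0 bytes for both replicas matches the idle cluster shown above; under write load the numbers fluctuate.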

Check 2: Inspect Sentinel state

```text
$ docker exec sentinel1 redis-cli -p 26379 INFO sentinel
# Sentinel
sentinel_masters:1
sentinel_tilt:0
sentinel_tilt_since_seconds:-1
sentinel_running_scripts:0
sentinel_scripts_queue_length:0
sentinel_simulate_failure_flags:0
master0:name=mymaster,status=ok,address=redis-master:6379,slaves=2,sentinels=3
```

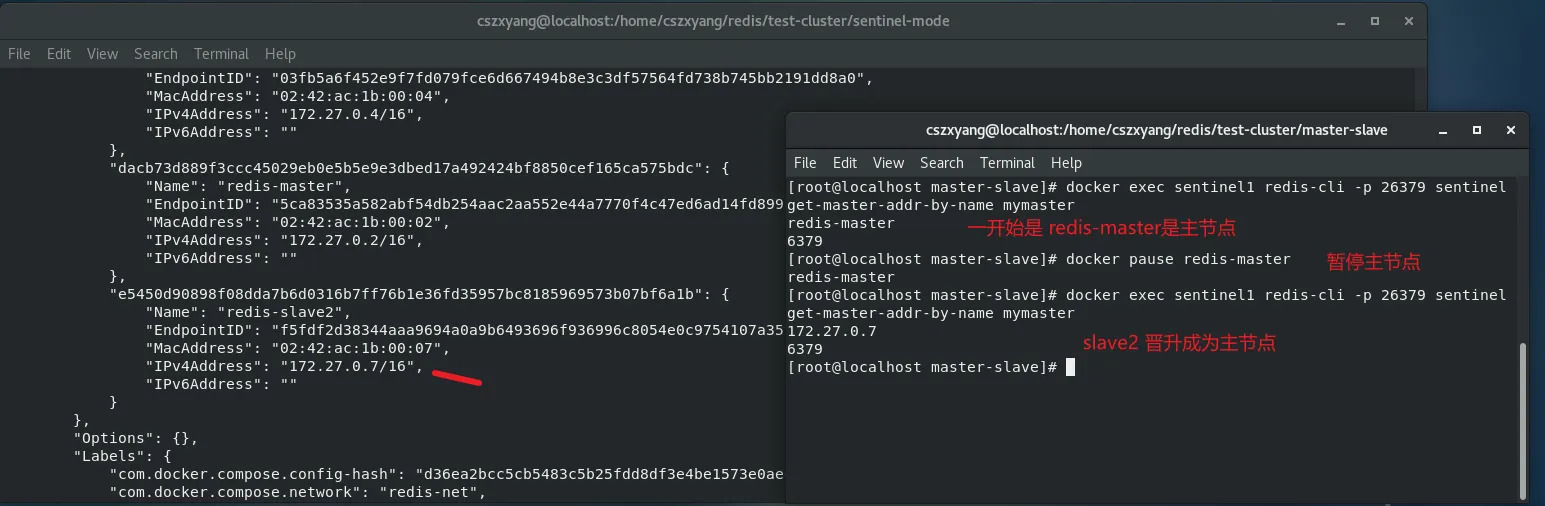

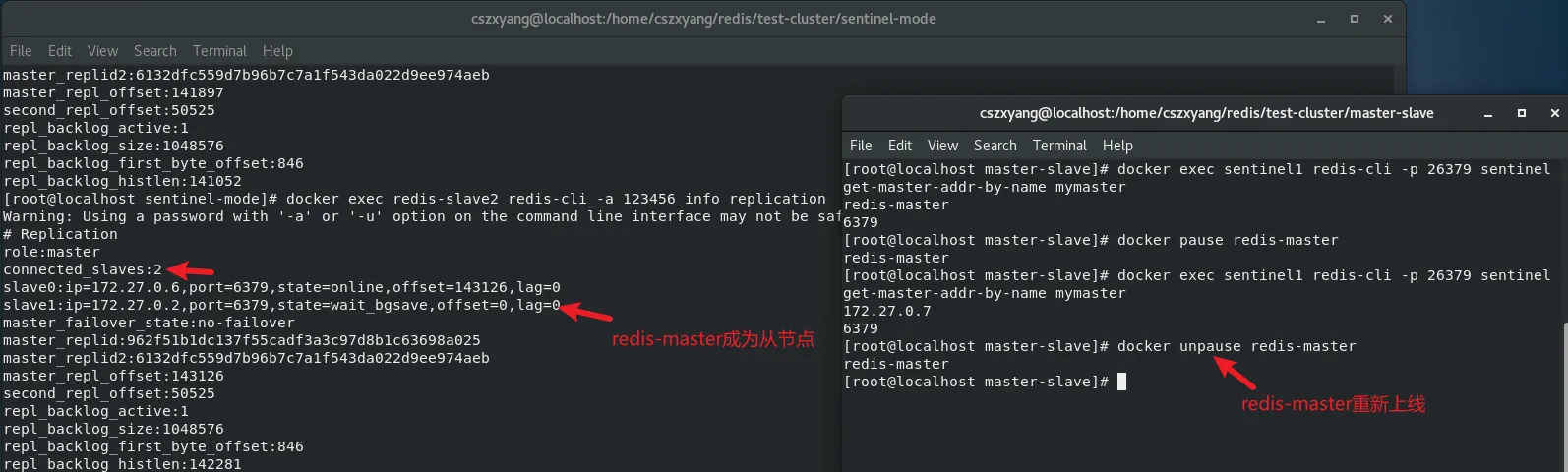

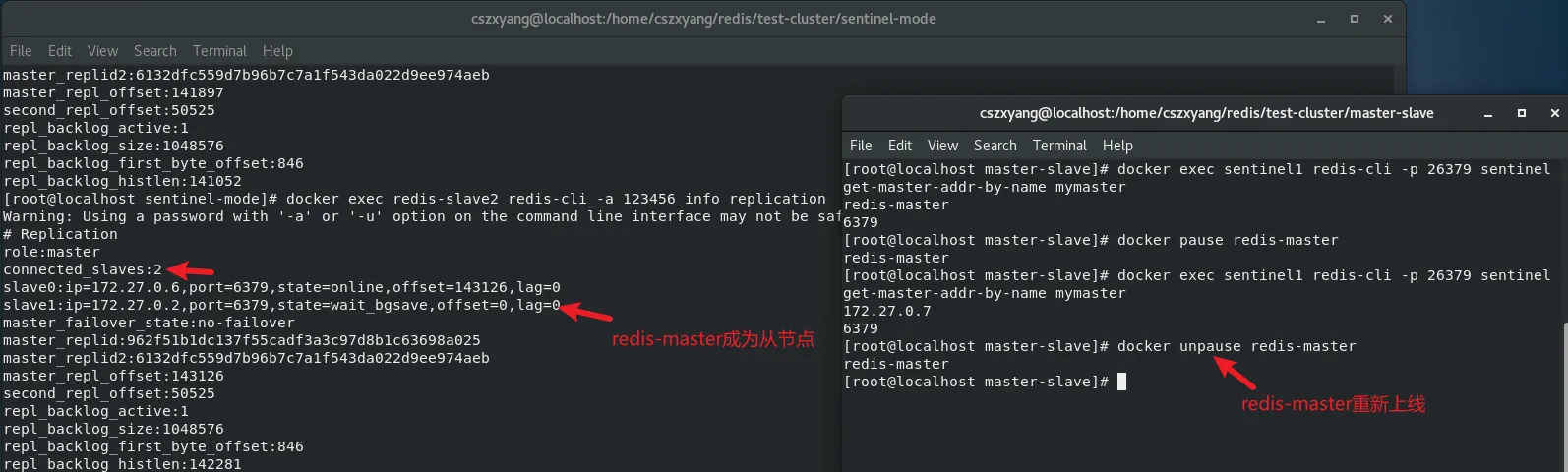

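Check 3: High availability

Simulate a master failure and check that the service keeps working:

```bash
# Pause the master container
docker pause redis-master
# Wait about 15 seconds, then ask a Sentinel for the new master
docker exec sentinel1 redis-cli -p 26379 sentinel get-master-addr-by-name mymaster
# Resume the old master (it will become a replica)
docker unpause redis-master
```

Once redis-master comes back online, it rejoins as a replica.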

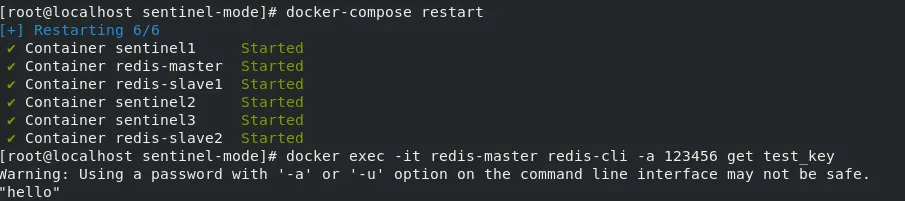

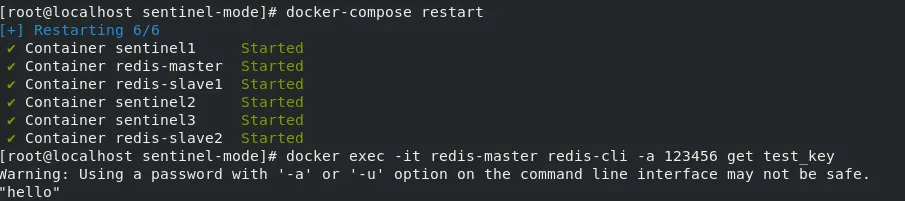

Check 4: Persistence

```bash
# 1. Write test data
docker exec -it redis-master redis-cli -a 123456 set test_key "hello"
# 2. Force a restart of the whole cluster
docker-compose restart
# 3. Verify the data is still there
docker exec -it redis-master redis-cli -a 123456 get test_key
# Should return "hello"
```

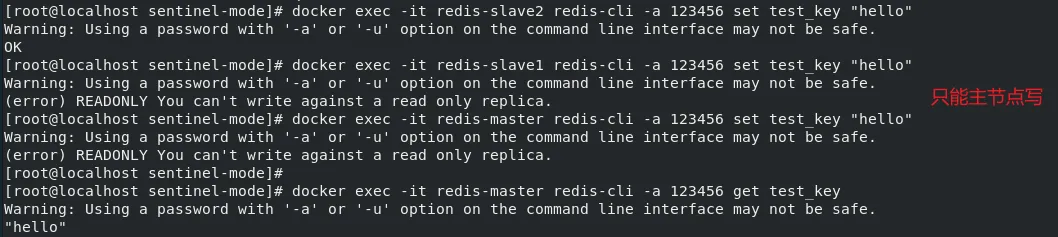

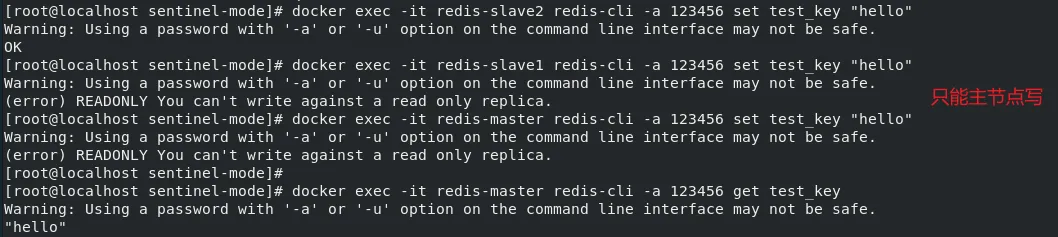

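As in plain replication mode, reads and writes are split under Sentinel: writes must go to the master, and the replicas are read-only.

The data is still there after the cluster restarts.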

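3. Cluster mode (sharded)

At least three masters are required; with one replica each, that makes six nodes.

Directory layout

```text
cluster-mode/
├── docker-compose.yml     # main orchestration file
└── data/                  # persistent data
    ├── node1/             # node 1 data
    ├── node2/             # node 2 data
    ├── node3/             # node 3 data
    ├── node4/             # node 4 data
    ├── node5/             # node 5 data
    └── node6/             # node 6 data
```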

Create the directories

```bash
mkdir -p ./data/{node1,node2,node3,node4,node5,node6}
```

docker-compose configuration

Write the following to docker-compose.yml:

```yaml
services:
  redis-node-1:
    image: redis:7.0
    container_name: redis-node-1
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7001:6379"
    volumes:
      - ./data/node1:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.2

  redis-node-2:
    image: redis:7.0
    container_name: redis-node-2
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7002:6379"
    volumes:
      - ./data/node2:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.3

  redis-node-3:
    image: redis:7.0
    container_name: redis-node-3
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7003:6379"
    volumes:
      - ./data/node3:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.4

  redis-node-4:
    image: redis:7.0
    container_name: redis-node-4
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7004:6379"
    volumes:
      - ./data/node4:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.5

  redis-node-5:
    image: redis:7.0
    container_name: redis-node-5
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7005:6379"
    volumes:
      - ./data/node5:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.6

  redis-node-6:
    image: redis:7.0
    container_name: redis-node-6
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7006:6379"
    volumes:
      - ./data/node6:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.7

networks:
  redis-cluster-net:
    driver: bridge
    ipam:
      config:
        - subnet: 172.20.0.0/24
```

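Configuration notes:

| Parameter | Description |
| --- | --- |
| --cluster-enabled | Must be set to yes to enable cluster mode |
| --cluster-node-timeout | How long a node may be unreachable before it is considered failing (milliseconds) |
| ipam settings | Fixed IPs, so every node always keeps the same address |
| --cluster-replicas 1 | Option of redis-cli --cluster create, not of redis-server: one replica per master |
| appendonly yes | Enables AOF persistence for better durability |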

docker-compose-scale.yml configuration

This file is for scaling out: it adds a new node, which is then joined to the cluster by command (see Check 5 later on).

```yaml
services:
  redis-node-7:
    image: redis:7.0
    container_name: redis-node-7
    command: redis-server --cluster-enabled yes --cluster-config-file nodes.conf --cluster-node-timeout 5000 --appendonly yes
    ports:
      - "7007:6379"
    volumes:
      - ./data/node7:/data
    networks:
      redis-cluster-net:
        ipv4_address: 172.20.0.8

networks:
  redis-cluster-net:
    external: true
    name: cluster-mode_redis-cluster-net
```

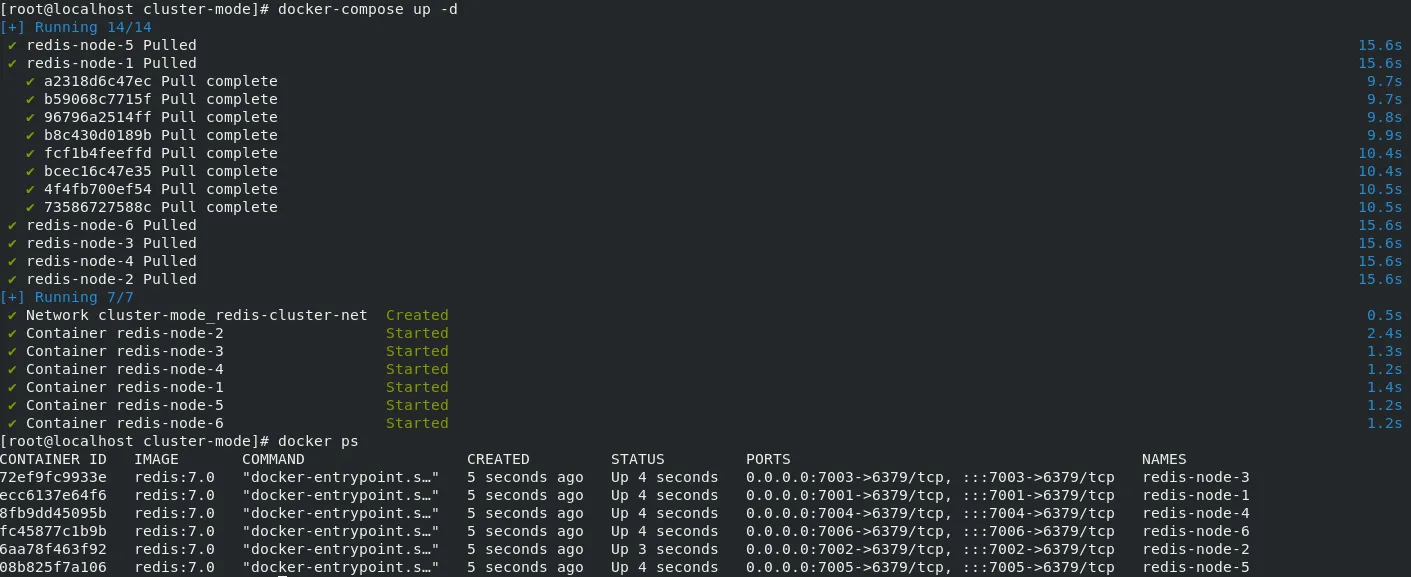

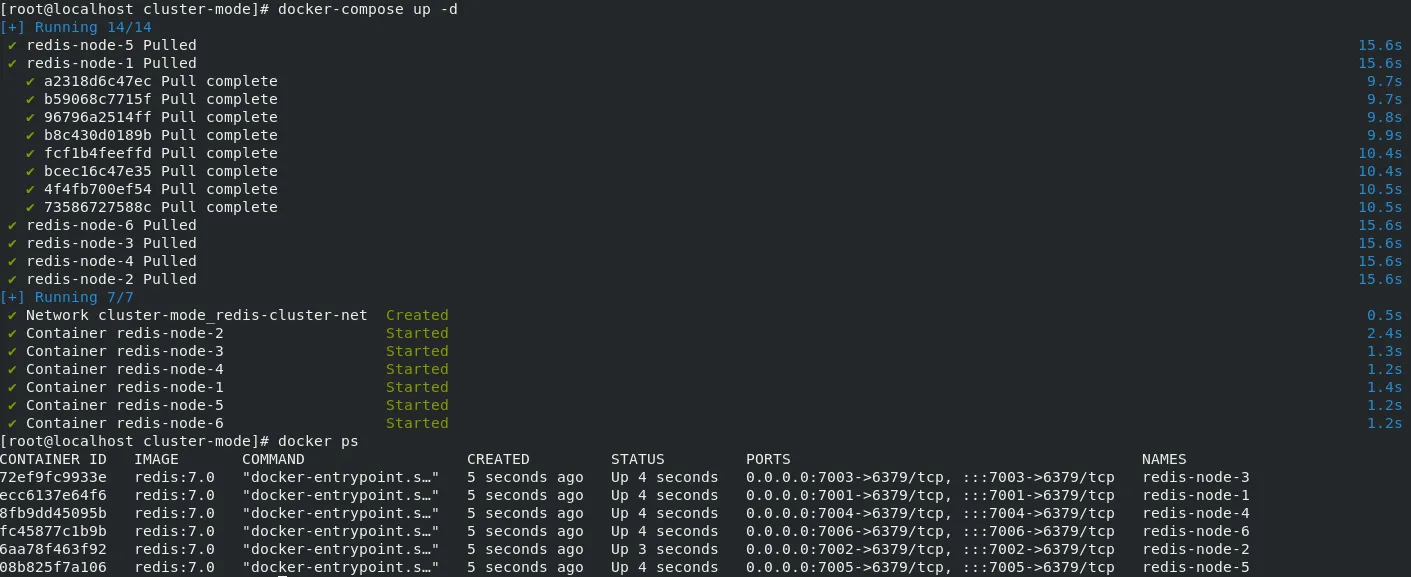

Start the cluster

Start all the nodes.

Then, from any node, run the following command to create the Redis Cluster:

```bash
docker exec -it redis-node-1 redis-cli --cluster create \
  172.20.0.2:6379 \
  172.20.0.3:6379 \
  172.20.0.4:6379 \
  172.20.0.5:6379 \
  172.20.0.6:6379 \
  172.20.0.7:6379 \
  --cluster-replicas 1 \
  --cluster-yes
```

The result:

```text
$ docker exec -it redis-node-1 redis-cli --cluster create \
> 172.20.0.2:6379 \
> 172.20.0.3:6379 \
> 172.20.0.4:6379 \
> 172.20.0.5:6379 \
> 172.20.0.6:6379 \
> 172.20.0.7:6379 \
> --cluster-replicas 1 \
> --cluster-yes
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 172.20.0.6:6379 to 172.20.0.2:6379
Adding replica 172.20.0.7:6379 to 172.20.0.3:6379
Adding replica 172.20.0.5:6379 to 172.20.0.4:6379
M: 1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379
   slots:[0-5460] (5461 slots) master
M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
   slots:[5461-10922] (5462 slots) master
M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
   slots:[10923-16383] (5461 slots) master
S: efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379
   replicates ae9abf26cd6c7162d367519917e8cf217314baaf
S: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
   replicates 1d4feacf706e4e0db2f96bc68dcde65662252a57
S: 7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379
   replicates ebe345c90dd4b51724d2797bfd44cb5c89f863ec
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
.
>>> Performing Cluster Check (using node 172.20.0.2:6379)
M: 1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
   slots: (0 slots) slave
   replicates 1d4feacf706e4e0db2f96bc68dcde65662252a57
M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379
   slots: (0 slots) slave
   replicates ae9abf26cd6c7162d367519917e8cf217314baaf
S: 7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379
   slots: (0 slots) slave
   replicates ebe345c90dd4b51724d2797bfd44cb5c89f863ec
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

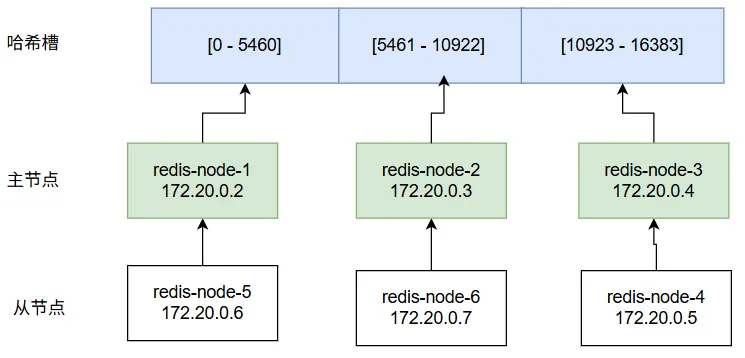

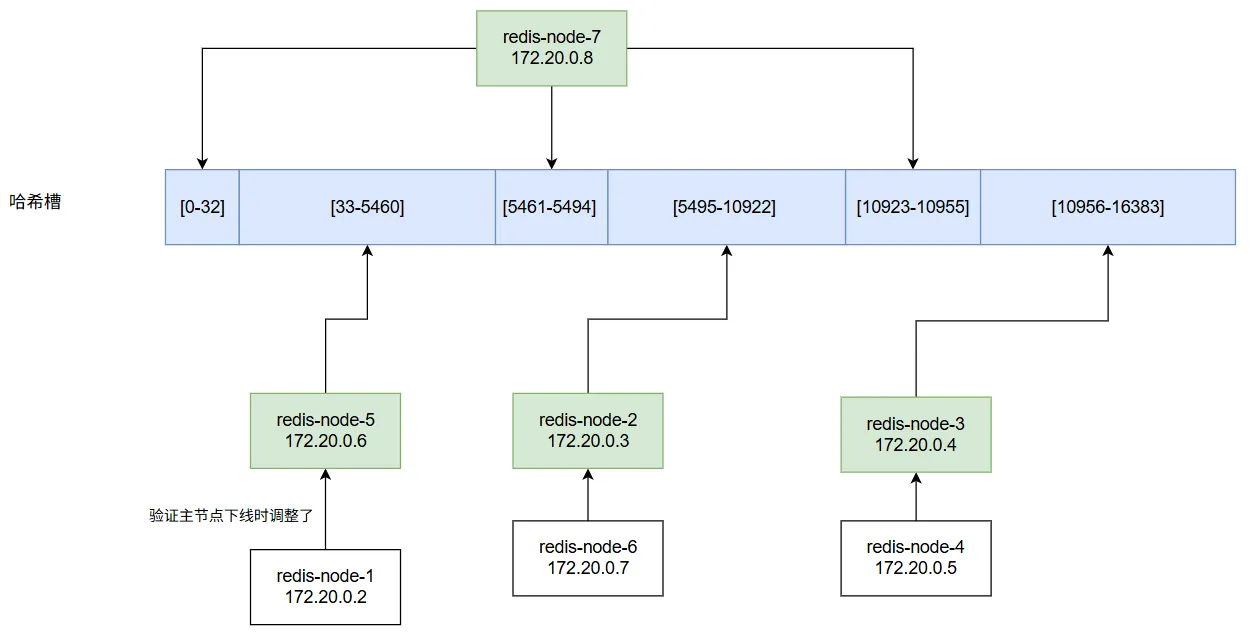

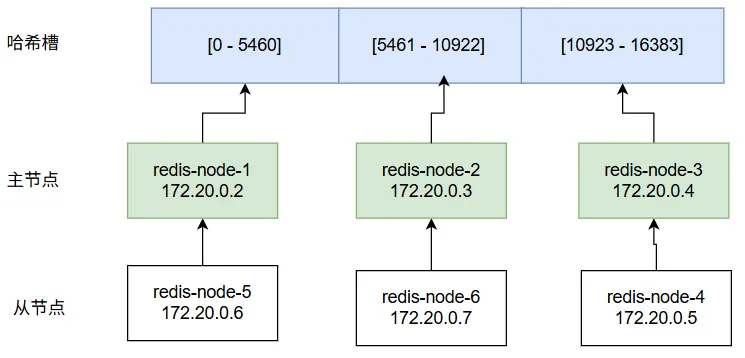

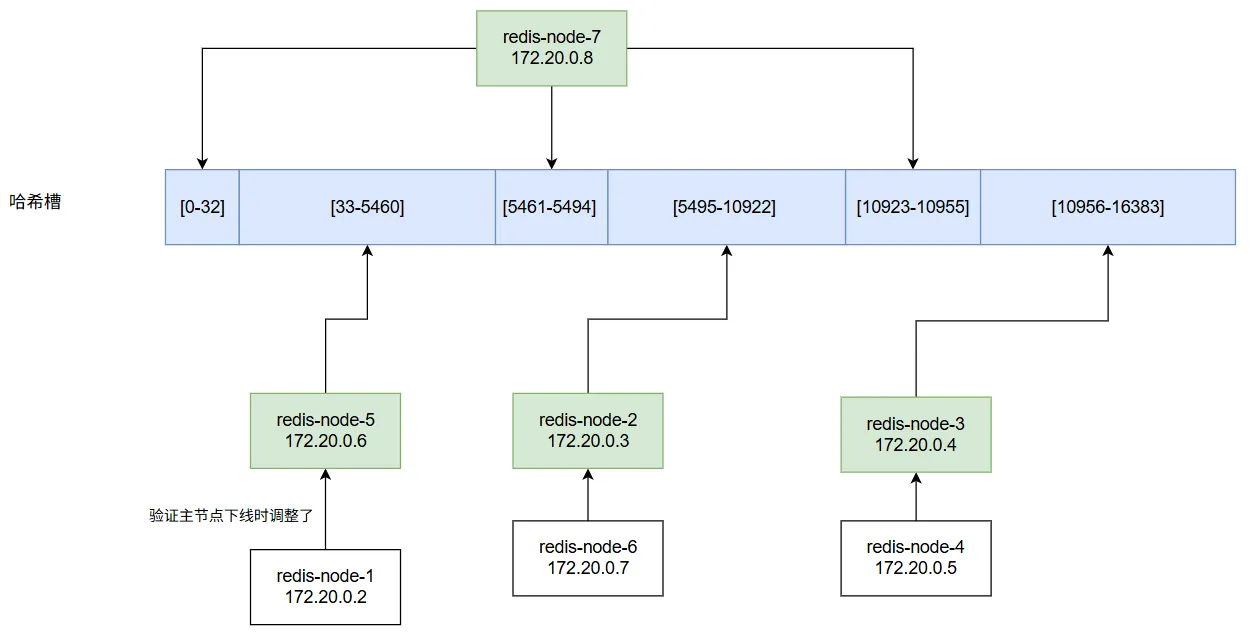

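Only the masters are assigned slots; each replica holds a copy of its master's data. The master-replica pairs and the slot assignment are shown in the accompanying figure: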
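The three ranges in the output (0-5460, 5461-10922, 10923-16383) come from dividing 16384 slots as evenly as possible among the masters. The sketch below reproduces those numbers for three masters; it is an illustration of the arithmetic, and whether redis-cli rounds identically for every master count is an assumption. The name `split_slots` is made up for this example.

```python
import math

TOTAL_SLOTS = 16384


def split_slots(masters: int) -> list[tuple[int, int]]:
    """Split slots 0..16383 into `masters` contiguous (first, last) ranges."""
    per_node = TOTAL_SLOTS / masters
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(masters):
        # Round the ideal end of this range to the nearest slot (half rounds up).
        last = math.floor(cursor + per_node - 1 + 0.5)
        # The final master always takes whatever is left.
        if index == masters - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


if __name__ == "__main__":
    for first, last in split_slots(3):
        print(f"{first}-{last} ({last - first + 1} slots)")
```

Because 16384 is not divisible by 3, one master ends up with 5462 slots and the other two with 5461, exactly as in the create output.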

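Feature checks

- Sharding: insert a large number of keys and observe how they spread across the nodes

- Scaling out: add a new node and migrate hash slots to it

- Failover: kill a master and watch its replica take over

- Cross-slot operations: test how **MGET** fails when its keys span multiple slots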

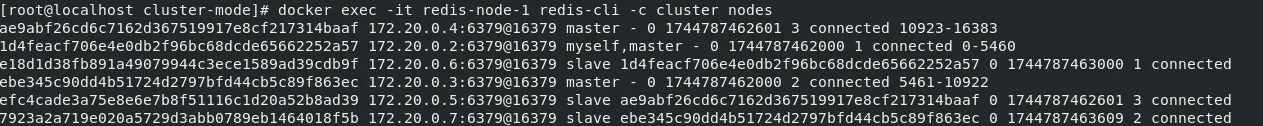

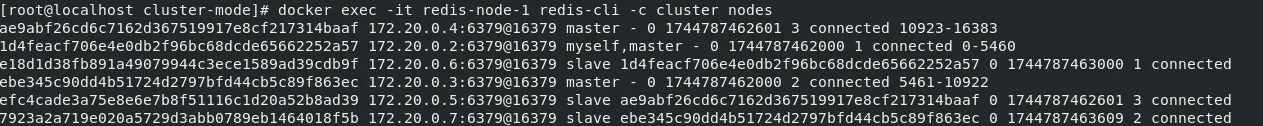

Check 1: Inspect cluster state

```bash
docker exec -it redis-node-1 redis-cli -c cluster nodes
```

Output:

```text
$ docker exec -it redis-node-1 redis-cli -c cluster nodes
ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379@16379 master - 0 1744787462601 3 connected 10923-16383
1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379@16379 myself,master - 0 1744787462000 1 connected 0-5460
e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379@16379 slave 1d4feacf706e4e0db2f96bc68dcde65662252a57 0 1744787463000 1 connected
ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379@16379 master - 0 1744787462000 2 connected 5461-10922
efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379@16379 slave ae9abf26cd6c7162d367519917e8cf217314baaf 0 1744787462601 3 connected
7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379@16379 slave ebe345c90dd4b51724d2797bfd44cb5c89f863ec 0 1744787463609 2 connected
```

What the fields mean

```text
# Format
[node ID] [IP:port@cluster bus port] [role/flags] [master node ID] [PING sent time] [PONG received time] [config epoch] [link state] [slots]
```

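Example (first line of the output):

| Field | Value |
| --- | --- |
| Node ID | ae9abf26cd6c7162d367519917e8cf217314baaf (unique identifier) |
| Service address | 172.20.0.4:6379 (address clients connect to) |
| Cluster bus port | 16379 (port for node-to-node communication) |
| Role | master |
| Master ID | - (a master has no upstream node) |
| Config epoch | 3 (version number of the cluster configuration) |
| Slots | 10923-16383 (5461 slots) |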
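To work with this output in a script, each line can be split into the fields listed above. A minimal sketch follows; the `ClusterNode` dataclass and `parse_cluster_node` function are names invented for this example, not part of any Redis client library.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterNode:
    node_id: str
    host: str
    port: int
    bus_port: int
    flags: List[str]
    master_id: Optional[str]
    config_epoch: int
    link_state: str
    slots: List[str]


def parse_cluster_node(line: str) -> ClusterNode:
    """Parse one line of `CLUSTER NODES` output."""
    parts = line.split()
    node_id, addr, flags, master_id, _ping, _pong, epoch, link = parts[:8]
    endpoint, _, bus = addr.partition("@")
    host, _, port = endpoint.rpartition(":")
    return ClusterNode(
        node_id=node_id,
        host=host,
        port=int(port),
        # Newer servers may append ",hostname" after the bus port; drop it.
        bus_port=int(bus.split(",")[0]),
        flags=flags.split(","),
        master_id=None if master_id == "-" else master_id,
        config_epoch=int(epoch),
        link_state=link,
        slots=parts[8:],
    )


if __name__ == "__main__":
    line = (
        "ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379@16379 "
        "master - 0 1744787462601 3 connected 10923-16383"
    )
    print(parse_cluster_node(line))
```

Replica lines simply have an empty slot list and a non-empty master ID.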

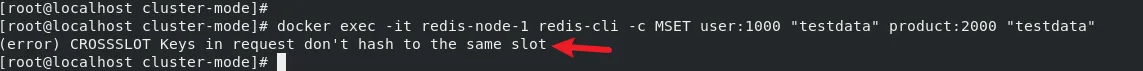

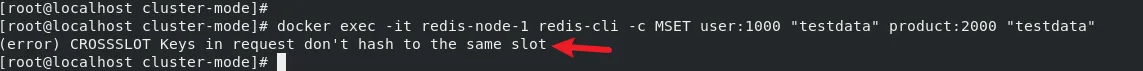

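Check 2: Writing keys that span slots

Redis Cluster distributes data across 16384 hash slots and requires all keys in a single command to belong to the same slot. Violating this rule shows up in the following typical ways:

(1) Multi-key commands are rejected

```text
# Try to write keys that hash to different slots (user:1000 and product:2000)
$ docker exec -it redis-node-1 redis-cli -c MSET user:1000 "testdata" product:2000 "testdata"
(error) CROSSSLOT Keys in request don't hash to the same slot
```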

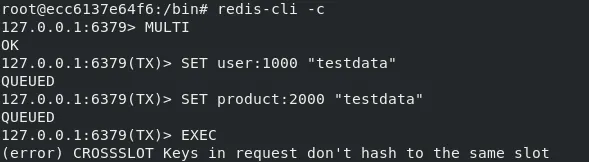

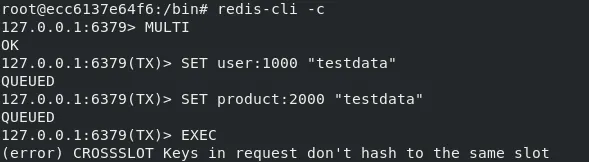

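(2) Transactions (MULTI/EXEC) are aborted

```text
# Enter the master node's container first
$ docker exec -it redis-node-1 /bin/bash
$ cd /bin
$ redis-cli -c
# A transaction containing cross-slot operations
127.0.0.1:6379> MULTI
OK
127.0.0.1:6379> SET user:1000 "testdata"
QUEUED
127.0.0.1:6379> SET product:2000 "testdata"
QUEUED
127.0.0.1:6379> EXEC
(error) EXECABORT Transaction discarded because of previous errors
```

The cross-slot operation is detected while the transaction is being validated, so the whole transaction is discarded and none of its commands run. (Nothing is rolled back, because nothing was executed.)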

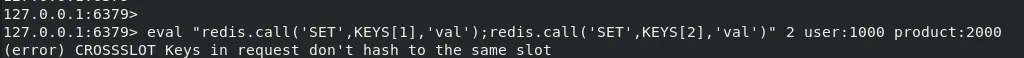

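(3) Lua scripts are restricted

```text
# A script that uses keys from different slots (run inside redis-cli)
127.0.0.1:6379> eval "redis.call('SET',KEYS[1],'val');redis.call('SET',KEYS[2],'val')" 2 user:1000 product:2000
(error) CROSSSLOT Keys in request don't hash to the same slot
```

All keys touched by a Lua script must belong to the same slot; a {hash tag} is the way to force related keys into one slot.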
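Why two keys land in different slots, and how a hash tag fixes that, is easier to see with the slot calculation written out. Redis Cluster maps a key to CRC16(key) mod 16384, and if the key contains a non-empty `{...}` section, only that section is hashed. The sketch below implements that rule in plain Python; `crc16_xmodem` and `key_slot` are names chosen for this example.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (the XMODEM variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the hash slot (0..16383) a key maps to, honoring hash tags."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" counts.
        if end != -1 and end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


if __name__ == "__main__":
    for key in ("user:1000", "product:2000", "{user:1000}:profile", "{user:1000}:cart"):
        print(key, "->", key_slot(key))
```

The last two keys share the tag `user:1000`, so they always map to the same slot and can be used together in MSET, a transaction, or a Lua script.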

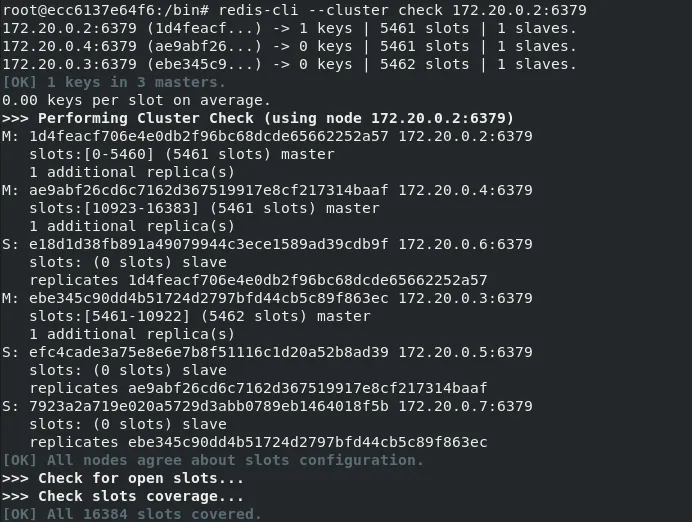

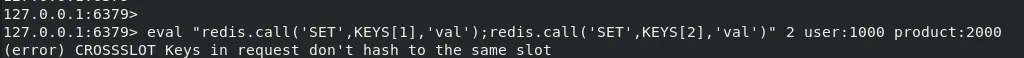

Check 3: Slot distribution

From inside any node, the following command shows the current slot distribution of the cluster:

```text
$ redis-cli --cluster check 172.20.0.2:6379
172.20.0.2:6379 (1d4feacf...) -> 1 keys | 5461 slots | 1 slaves.
172.20.0.4:6379 (ae9abf26...) -> 0 keys | 5461 slots | 1 slaves.
172.20.0.3:6379 (ebe345c9...) -> 0 keys | 5462 slots | 1 slaves.
[OK] 1 keys in 3 masters.
0.00 keys per slot on average.
>>> Performing Cluster Check (using node 172.20.0.2:6379)
M: 1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
   slots: (0 slots) slave
   replicates 1d4feacf706e4e0db2f96bc68dcde65662252a57
M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379
   slots: (0 slots) slave
   replicates ae9abf26cd6c7162d367519917e8cf217314baaf
S: 7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379
   slots: (0 slots) slave
   replicates ebe345c90dd4b51724d2797bfd44cb5c89f863ec
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

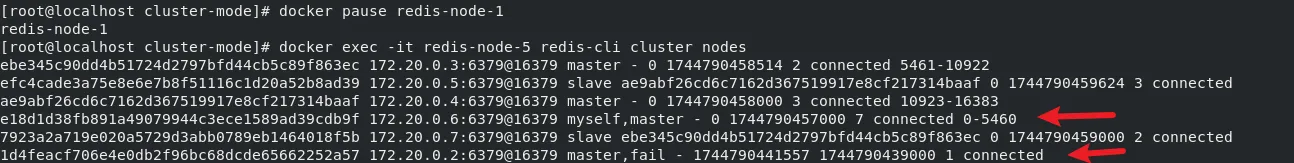

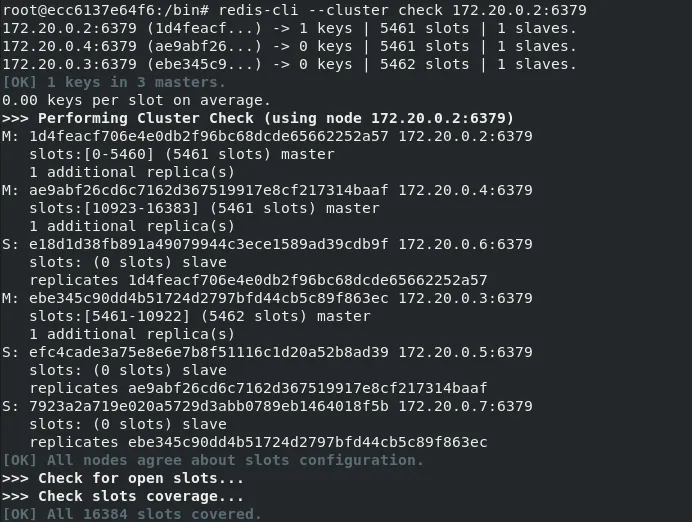

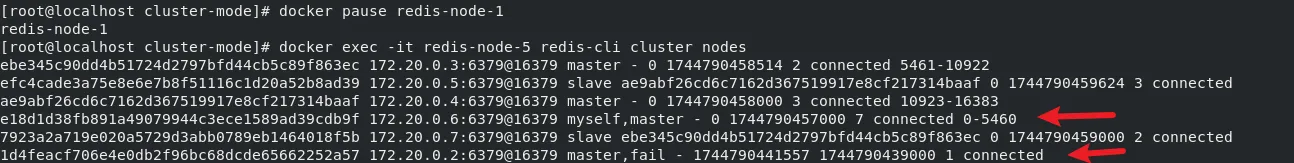

Check 4: Failover

```bash
# Pause a master node
docker pause redis-node-1
# Watch its replica get promoted (after about 15 seconds)
docker exec -it redis-node-5 redis-cli cluster nodes
```

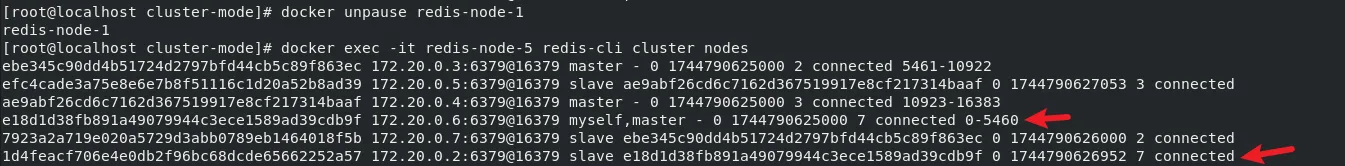

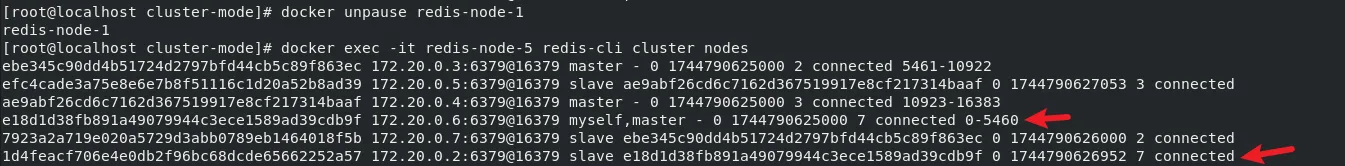

The output shows that the replica redis-node-5 has been promoted to master:

```text
$ docker exec -it redis-node-5 redis-cli cluster nodes
ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379@16379 master - 0 1744790458514 2 connected 5461-10922
efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379@16379 slave ae9abf26cd6c7162d367519917e8cf217314baaf 0 1744790459624 3 connected
ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379@16379 master - 0 1744790458000 3 connected 10923-16383
e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379@16379 myself,master - 0 1744790457000 7 connected 0-5460
7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379@16379 slave ebe345c90dd4b51724d2797bfd44cb5c89f863ec 0 1744790459000 2 connected
1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379@16379 master,fail - 1744790441557 1744790439000 1 connected
```

When redis-node-1 comes back online, it becomes a replica of redis-node-5:

```text
$ docker exec -it redis-node-5 redis-cli cluster nodes
ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379@16379 master - 0 1744790625000 2 connected 5461-10922
efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379@16379 slave ae9abf26cd6c7162d367519917e8cf217314baaf 0 1744790627053 3 connected
ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379@16379 master - 0 1744790625000 3 connected 10923-16383
e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379@16379 myself,master - 0 1744790625000 7 connected 0-5460
7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379@16379 slave ebe345c90dd4b51724d2797bfd44cb5c89f863ec 0 1744790626000 2 connected
1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379@16379 slave e18d1d38fb891a49079944c3ece1589ad39cdb9f 0 1744790626952 7 connected
```

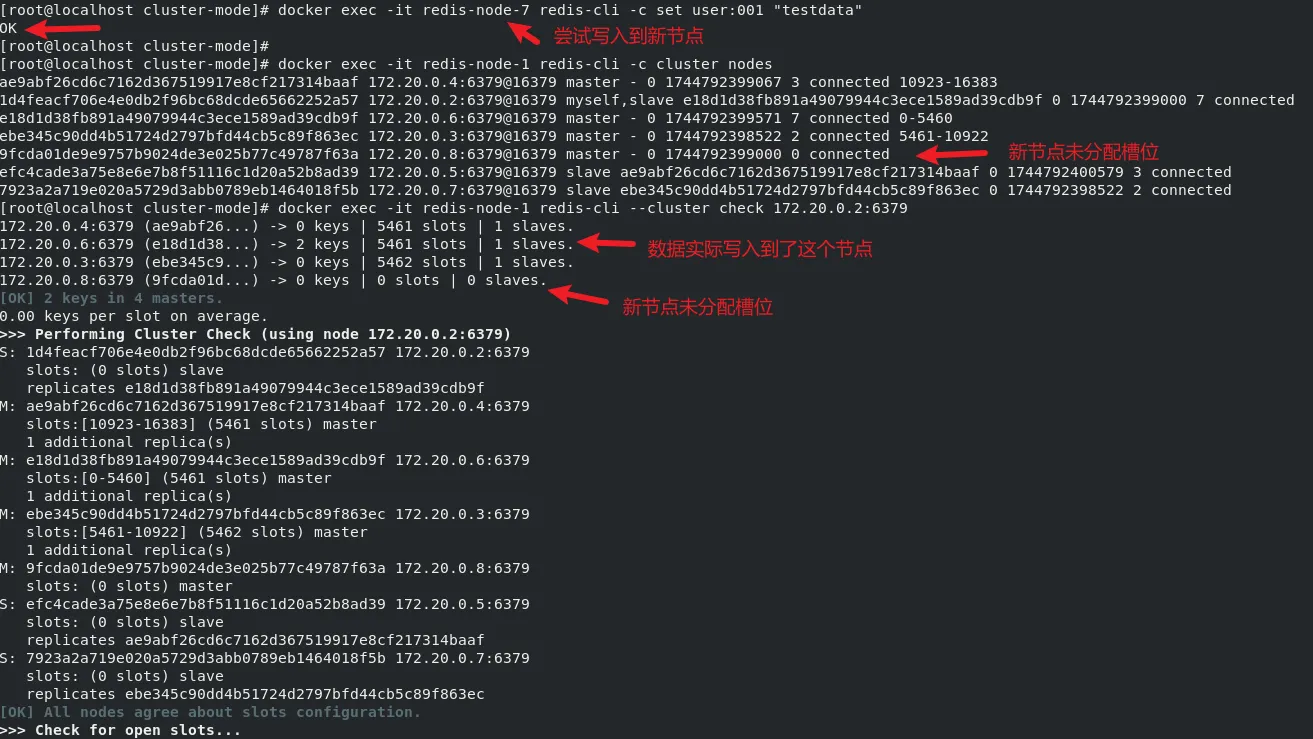

Check 5: Scaling out

Create the directory and start the new node:

```bash
mkdir -p ./data/node7
# Start only redis-node-7
docker-compose -f docker-compose-scale.yml up -d redis-node-7
# Verify the node is alive; should return PONG
docker exec -it redis-node-7 redis-cli ping
```

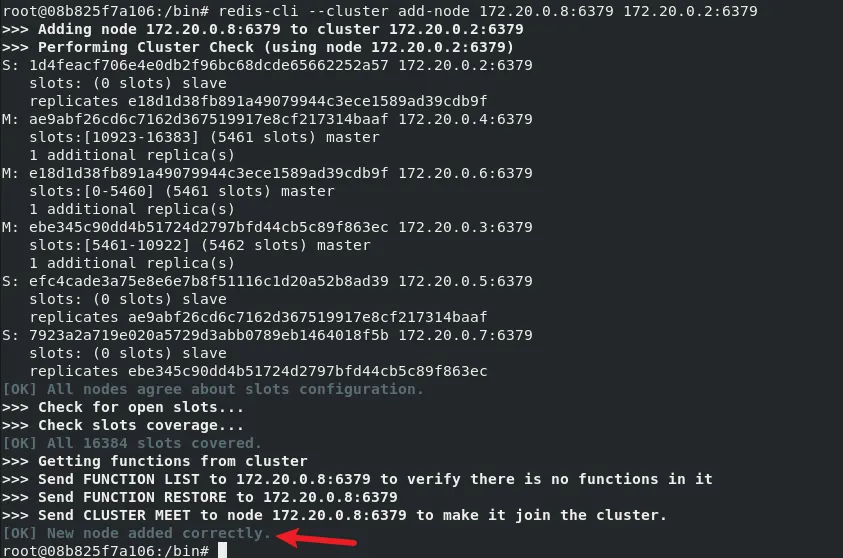

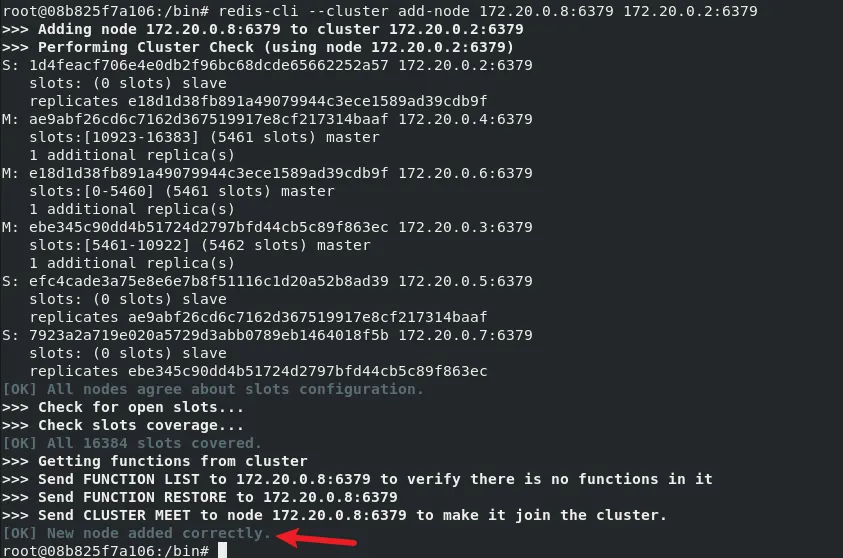

There are two ways to join the cluster:

```bash
# Join as a master (no slots assigned yet)
redis-cli --cluster add-node 172.20.0.8:6379 172.20.0.2:6379
# Join as a replica (the master's node ID must be given)
redis-cli --cluster add-node 172.20.0.8:6379 172.20.0.2:6379 --cluster-slave --cluster-master-id <master node ID>
```

Syntax:

```text
redis-cli --cluster add-node <new node IP:port> <existing cluster node IP:port>
redis-cli --cluster add-node <new node IP:port> <existing cluster node IP:port> \
  --cluster-slave \
  --cluster-master-id <target master node ID>
```

Here the first option is used: the new node joins the cluster as a master.

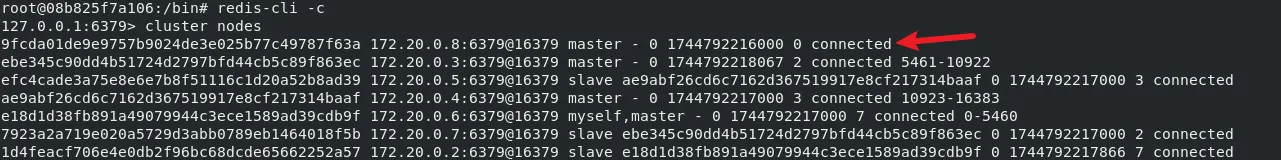

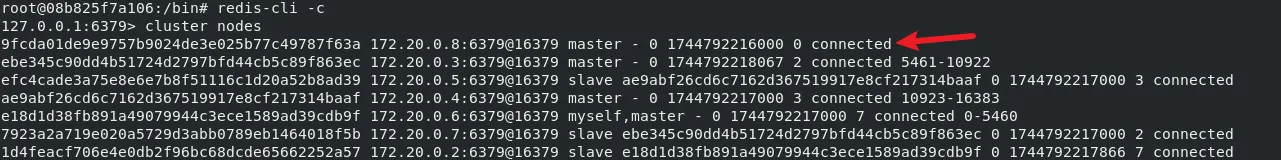

The new node has no slots assigned yet:

```text
127.0.0.1:6379> cluster nodes
9fcda01de9e9757b9024de3e025b77c49787f63a 172.20.0.8:6379@16379 master - 0 1744792216000 0 connected
ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379@16379 master - 0 1744792218067 2 connected 5461-10922
efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379@16379 slave ae9abf26cd6c7162d367519917e8cf217314baaf 0 1744792217000 3 connected
ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379@16379 master - 0 1744792217000 3 connected 10923-16383
e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379@16379 myself,master - 0 1744792217000 7 connected 0-5460
7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379@16379 slave ebe345c90dd4b51724d2797bfd44cb5c89f863ec 0 1744792217000 2 connected
1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379@16379 slave e18d1d38fb891a49079944c3ece1589ad39cdb9f 0 1744792217866 7 connected
```

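Writing through the new node appears to work, but the client is actually redirected (MOVED) and the key is stored on one of the other masters:

```text
$ docker exec -it redis-node-7 redis-cli -c set user:001 "testdata"
```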
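What `redis-cli -c` does behind the scenes is read the redirection error and retry against the node it names. The error text has the form `MOVED <slot> <host>:<port>` (or `ASK ...` during a slot migration). A small sketch of parsing it; `parse_redirect` is a name made up for this example, and the sample message is illustrative rather than captured from this cluster.

```python
def parse_redirect(error: str) -> tuple[str, int, str, int]:
    """Split a MOVED/ASK error into (kind, slot, host, port)."""
    kind, slot, addr = error.split()
    if kind not in ("MOVED", "ASK"):
        raise ValueError(f"not a redirection: {error!r}")
    host, _, port = addr.rpartition(":")
    return kind, int(slot), host, int(port)


if __name__ == "__main__":
    # Illustrative message; slot and address are example values.
    print(parse_redirect("MOVED 12182 172.20.0.4:6379"))
```

A cluster-aware client would additionally refresh its slot map after a MOVED, so later commands for that slot go straight to the right node.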

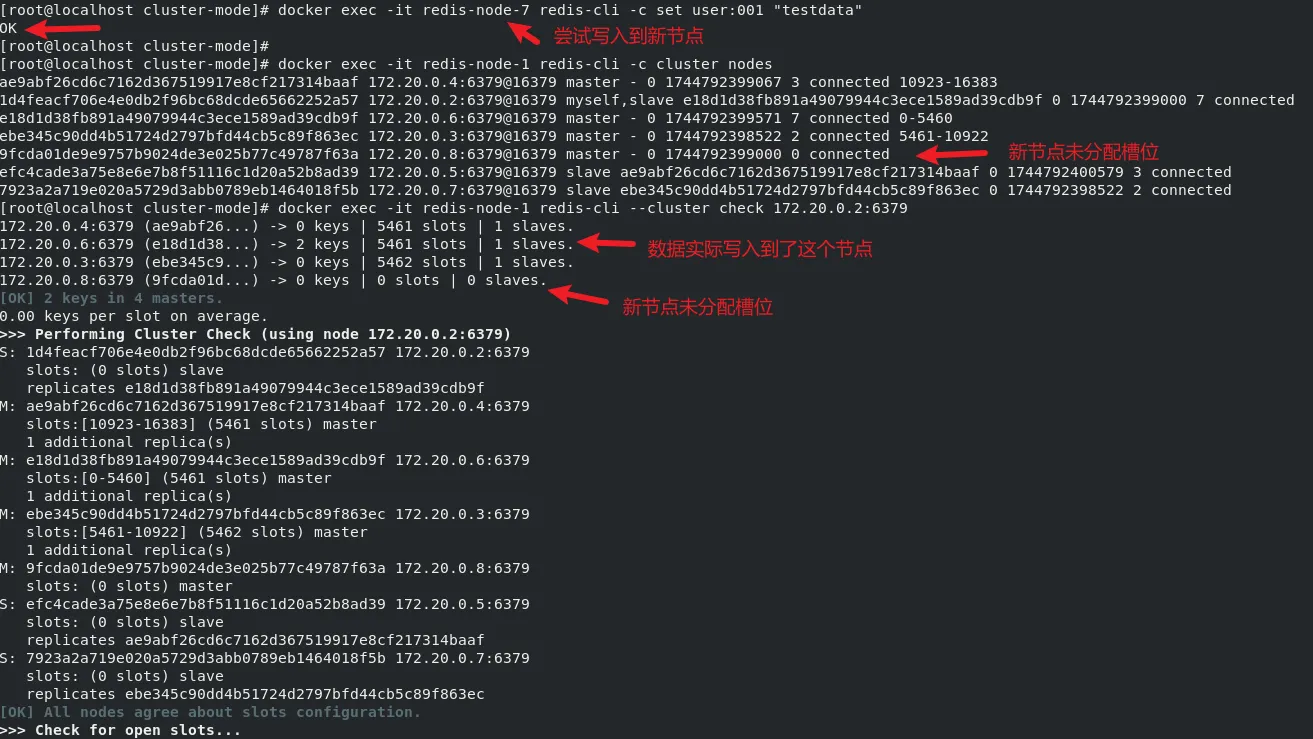

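Assign slots

```bash
# Assign slots to the new node
redis-cli --cluster reshard 172.20.0.2:6379
# Then answer the interactive prompts: number of slots to move and the source nodes
```

Syntax:

```text
redis-cli --cluster reshard <any cluster node IP:port>
```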

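Interactive prompts

| Prompt | How to answer |
| --- | --- |
| How many slots do you want to move? | Enter the total number of slots to migrate (e.g. 1000) |
| What is the receiving node ID? | Enter the target node's ID (look it up with cluster nodes) |
| Which nodes should the slots come from? | Enter all to take slots from all masters proportionally, or enter specific node IDs to take them only from those nodes |
| Proceed with the proposed reshard plan? | Enter yes to start |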

Run the reshard:

```text
$ redis-cli --cluster reshard 172.20.0.2:6379
>>> Performing Cluster Check (using node 172.20.0.2:6379)
S: 1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379
   slots: (0 slots) slave
   replicates e18d1d38fb891a49079944c3ece1589ad39cdb9f
M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
M: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: 9fcda01de9e9757b9024de3e025b77c49787f63a 172.20.0.8:6379
   slots: (0 slots) master
S: efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379
   slots: (0 slots) slave
   replicates ae9abf26cd6c7162d367519917e8cf217314baaf
S: 7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379
   slots: (0 slots) slave
   replicates ebe345c90dd4b51724d2797bfd44cb5c89f863ec
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 100
What is the receiving node ID? 9fcda01de9e9757b9024de3e025b77c49787f63a
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1: all
Ready to move 100 slots.
  Source nodes:
    M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
       slots:[10923-16383] (5461 slots) master
       1 additional replica(s)
    M: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
       slots:[0-5460] (5461 slots) master
       1 additional replica(s)
    M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
       slots:[5461-10922] (5462 slots) master
       1 additional replica(s)
  Destination node:
    M: 9fcda01de9e9757b9024de3e025b77c49787f63a 172.20.0.8:6379
       slots: (0 slots) master
  Resharding plan:
    Moving slot 5461 from ebe345c90dd4b51724d2797bfd44cb5c89f863ec
    Moving slot 5462 from ebe345c90dd4b51724d2797bfd44cb5c89f863ec
    ...
Do you want to proceed with the proposed reshard plan (yes/no)? yes
Moving slot 5461 from 172.20.0.3:6379 to 172.20.0.8:6379:
Moving slot 5462 from 172.20.0.3:6379 to 172.20.0.8:6379:
Moving slot 5463 from 172.20.0.3:6379 to 172.20.0.8:6379:
Moving slot 5464 from 172.20.0.3:6379 to 172.20.0.8:6379:
Moving slot 5465 from 172.20.0.3:6379 to 172.20.0.8:6379:
...
```

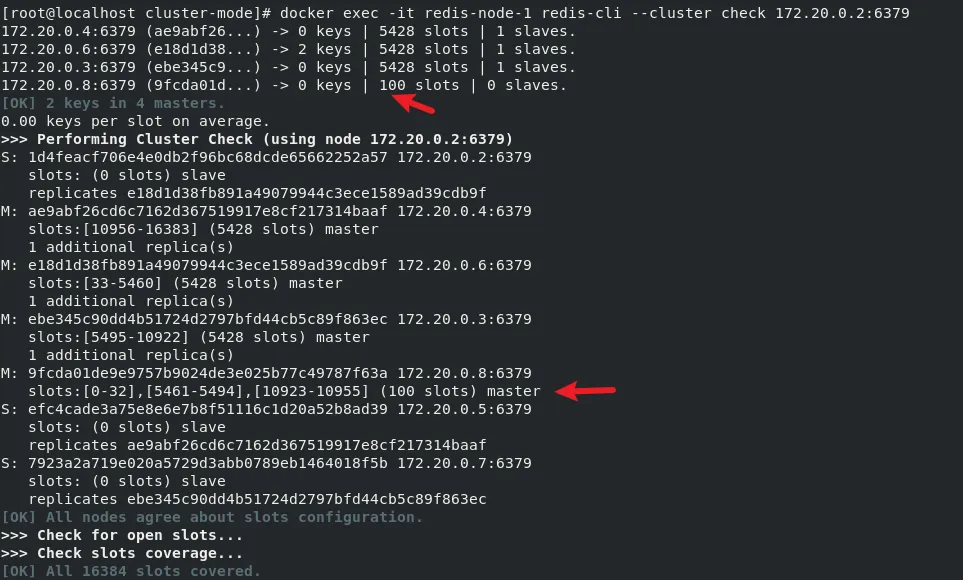

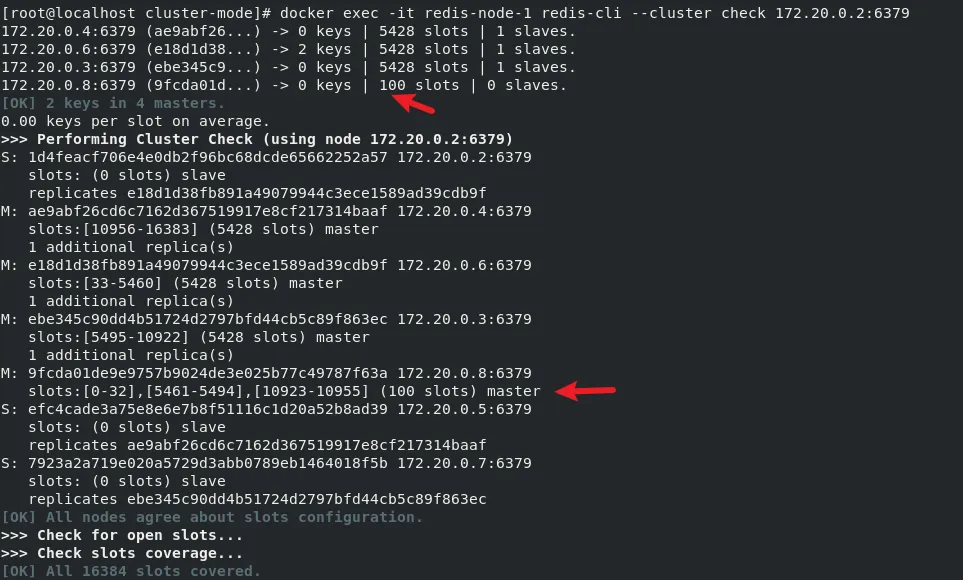

Check the slot assignment of the masters:

```text
docker exec -it redis-node-1 redis-cli --cluster check 172.20.0.2:6379
172.20.0.4:6379 (ae9abf26...) -> 0 keys | 5428 slots | 1 slaves.
172.20.0.6:6379 (e18d1d38...) -> 2 keys | 5428 slots | 1 slaves.
172.20.0.3:6379 (ebe345c9...) -> 0 keys | 5428 slots | 1 slaves.
172.20.0.8:6379 (9fcda01d...) -> 0 keys | 100 slots | 0 slaves.
[OK] 2 keys in 4 masters.
0.00 keys per slot on average.
>>> Performing Cluster Check (using node 172.20.0.2:6379)
S: 1d4feacf706e4e0db2f96bc68dcde65662252a57 172.20.0.2:6379
   slots: (0 slots) slave
   replicates e18d1d38fb891a49079944c3ece1589ad39cdb9f
M: ae9abf26cd6c7162d367519917e8cf217314baaf 172.20.0.4:6379
   slots:[10956-16383] (5428 slots) master
   1 additional replica(s)
M: e18d1d38fb891a49079944c3ece1589ad39cdb9f 172.20.0.6:6379
   slots:[33-5460] (5428 slots) master
   1 additional replica(s)
M: ebe345c90dd4b51724d2797bfd44cb5c89f863ec 172.20.0.3:6379
   slots:[5495-10922] (5428 slots) master
   1 additional replica(s)
M: 9fcda01de9e9757b9024de3e025b77c49787f63a 172.20.0.8:6379
   slots:[0-32],[5461-5494],[10923-10955] (100 slots) master
S: efc4cade3a75e8e6e7b8f51116c1d20a52b8ad39 172.20.0.5:6379
   slots: (0 slots) slave
   replicates ae9abf26cd6c7162d367519917e8cf217314baaf
S: 7923a2a719e020a5729d3abb0789eb1464018f5b 172.20.0.7:6379
   slots: (0 slots) slave
   replicates ebe345c90dd4b51724d2797bfd44cb5c89f863ec
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```
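The new node ended up with 33 + 34 + 33 slots taken from the three existing masters. That split is consistent with taking slots from each source in proportion to how many it owns, rounding up for the largest source and down for the rest. The sketch below reproduces those numbers; it is an illustration under that assumption, not redis-cli's actual code, and `plan_reshard` is a made-up name.

```python
import math


def plan_reshard(source_slots: dict[str, int], to_move: int) -> dict[str, int]:
    """Return how many slots to take from each source node."""
    total = sum(source_slots.values())
    # Largest owner first; it absorbs the rounding remainder.
    ordered = sorted(source_slots.items(), key=lambda item: item[1], reverse=True)
    plan = {}
    for index, (node, owned) in enumerate(ordered):
        share = to_move / total * owned
        plan[node] = math.ceil(share) if index == 0 else math.floor(share)
    return plan


if __name__ == "__main__":
    # Slot counts of the three masters before the reshard, from the output above.
    before = {"172.20.0.4": 5461, "172.20.0.6": 5461, "172.20.0.3": 5462}
    print(plan_reshard(before, 100))
```

After the move each old master holds 5428 slots, matching the check output.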

The current node relationships and slot assignment are shown in the figures below:

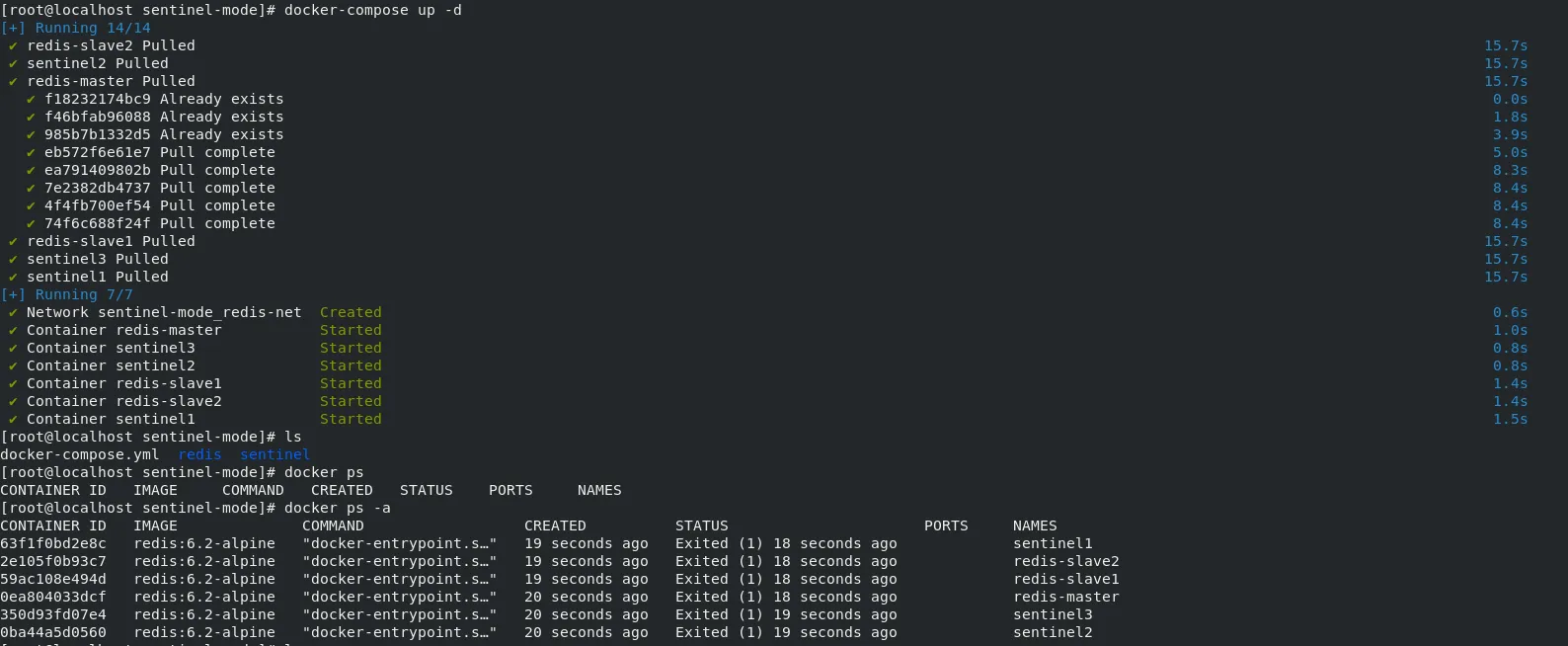

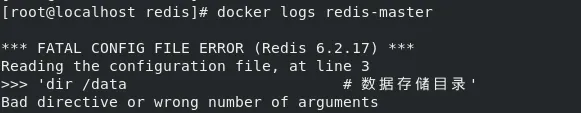

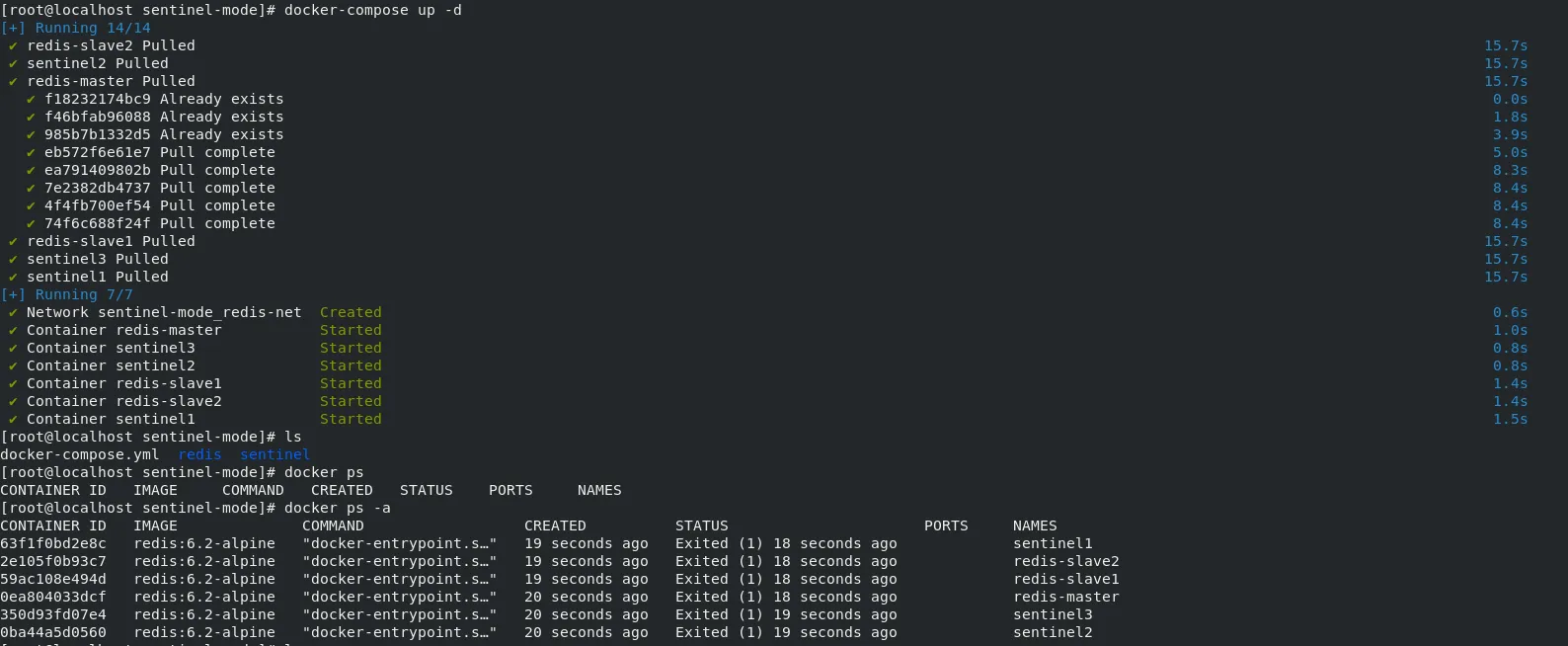

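III. Problems encountered

Inline comments broke cluster startup

After bringing the cluster up, the containers turned out not to have started successfully.

The container logs suggested that inline comments were the likely cause.

Redis configuration files do not support inline comments: every comment must sit on its own line starting with #. The configuration contained lines like this:

```conf
dir /data # data directory    <- wrong: a comment cannot follow a directive on the same line
```

It has to be changed to:

```conf
# data directory
dir /data
```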
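A quick pre-flight check can catch this before the containers are started. The sketch below is a simple heuristic that flags non-comment lines containing a `#` preceded by whitespace; it is not a Redis config parser, and it would also flag a legitimate `#` inside a quoted value. The function name `find_inline_comments` is made up for this example.

```python
def find_inline_comments(conf_text: str) -> list[int]:
    """Return 1-based line numbers that look like 'directive value # comment'."""
    flagged = []
    for number, line in enumerate(conf_text.splitlines(), start=1):
        stripped = line.strip()
        # Blank lines and whole-line comments are fine.
        if not stripped or stripped.startswith("#"):
            continue
        if " #" in stripped or "\t#" in stripped:
            flagged.append(number)
    return flagged


if __name__ == "__main__":
    sample = "# data directory\ndir /data # data directory\nport 6379\n"
    print(find_inline_comments(sample))
```

Running it over each redis.conf and sentinel*.conf before `docker-compose up` points at the offending lines directly.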

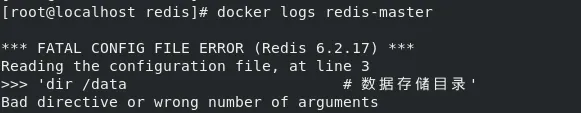

Sentinels failed to start with the cluster

Initially I used the redis:alpine image. When starting the cluster, master, slave1 and slave2 all came up normally, but all three Sentinel nodes failed to start. The logs for sentinel1 showed the following error:

```text
[root@localhost sentinel-mode]# docker-compose logs --tail=100 sentinel1
sentinel1 | 1:X 15 Apr 2025 08:03:25.961 # Failed to resolve hostname 'redis-master'
sentinel1 |
sentinel1 | *** FATAL CONFIG FILE ERROR (Redis 6.2.17) ***
sentinel1 | Reading the configuration file, at line 2
sentinel1 | >>> 'sentinel monitor mymaster redis-master 6379 2'
sentinel1 | Can't resolve instance hostname.
```

It looks as if the Sentinel, when trying to connect to the master, could not resolve redis-master to the master's IP. According to a blog post I found, Sentinel can resolve hostnames only from version 6.2 onward, and the feature is disabled by default. The following has to be added:

```conf
sentinel resolve-hostnames yes
sentinel announce-hostnames yes
```